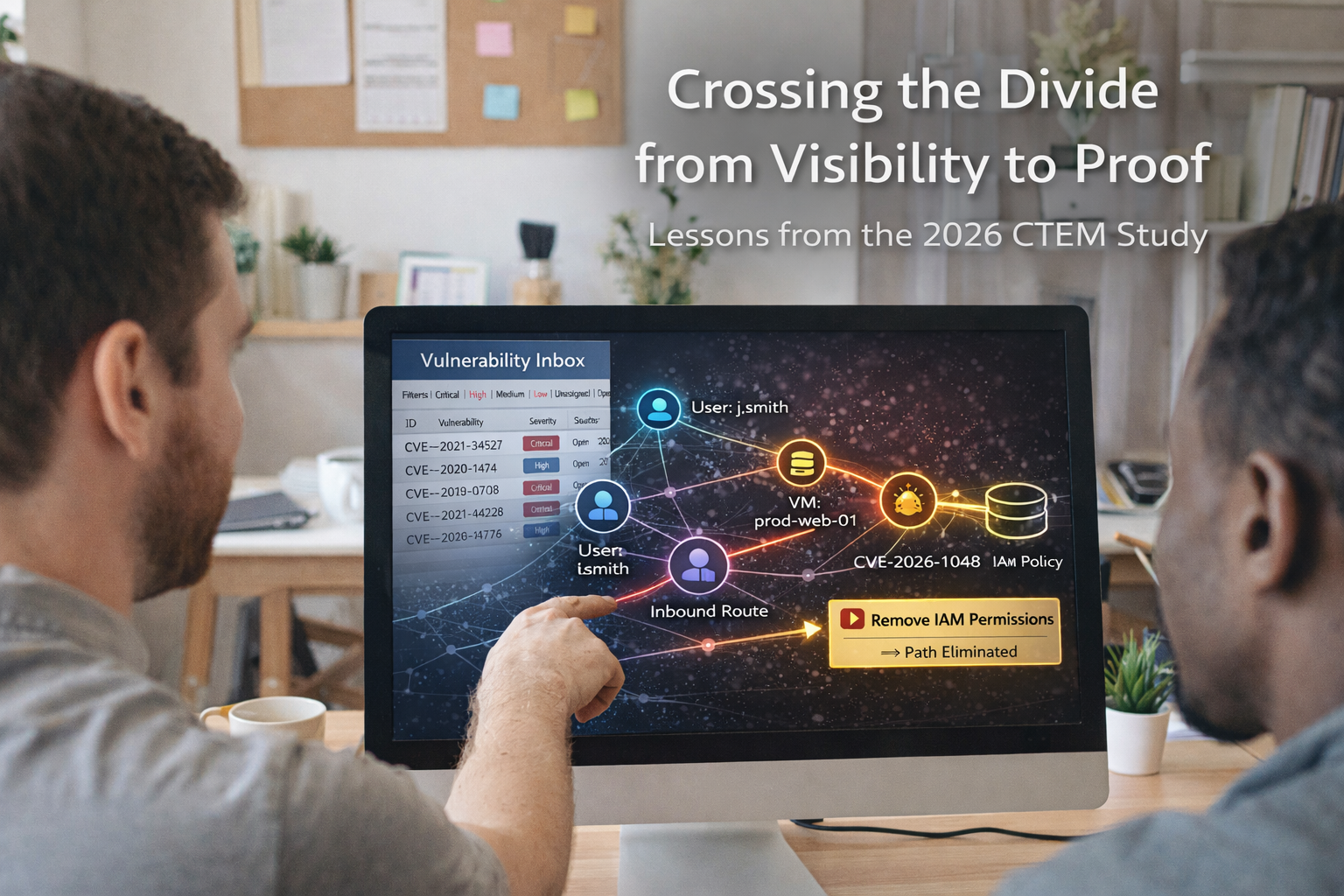

Lessons from the 2026 CTEM Study: Crossing the Divide from Visibility to Proof

A recent 2026 study highlighted by The Hacker News found that only 16% of organizations have operationalized Continuous Threat Exposure Management (CTEM). Those teams report better visibility, stronger adoption, and measurably improved outcomes. Yet 87% of security leaders already believe CTEM is important. The gap, then, isn’t awareness; it’s execution.

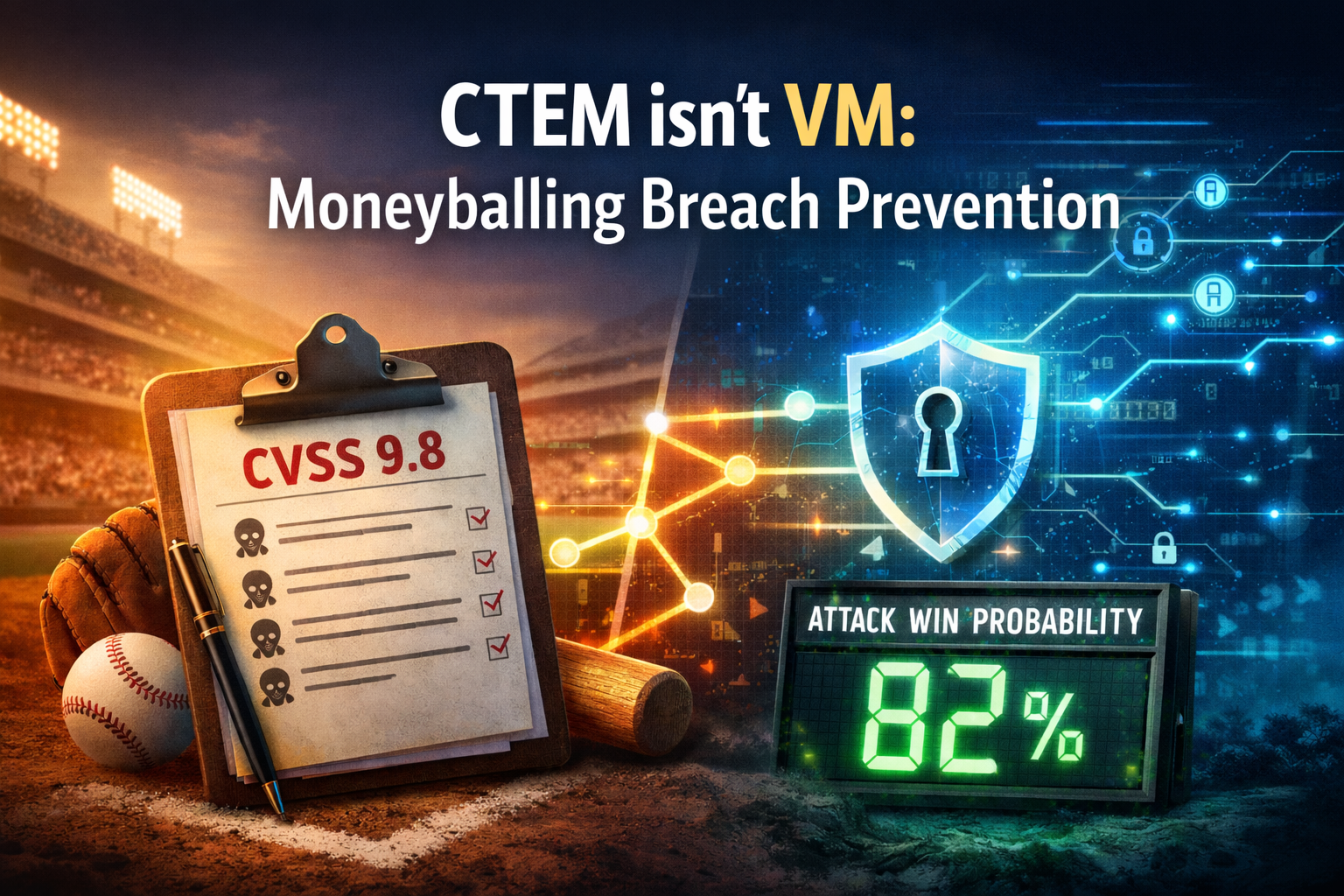

Most leaders agree with the philosophy. They know patch-everything approaches don’t scale. They know CVSS is a blunt instrument. They understand that exposure must be validated continuously rather than reviewed quarterly. Conceptually, CTEM makes sense. In practice, continuously proving exploitability inside a live environment is far harder than most frameworks imply.

Complexity Has Crossed the Threshold

The research shows that once environments exceed a certain complexity threshold, the likelihood of attack rises sharply. More domains create more assets. More assets create more identities, integrations, and misconfigurations. At scale, manual reasoning collapses under its own weight.
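A back-of-the-envelope sketch of why manual reasoning breaks down. This uses a deliberately simple, hypothetical model, not the study’s methodology: assume an environment with `layers` tiers (for example edge services, workloads, identities, data stores), each holding `width` assets, and assume every asset in one tier can reach every asset in the next. Under that assumption the asset count grows linearly, while the number of candidate entry-to-target chains grows exponentially with depth.

```python
# Hypothetical, deliberately simplified model: `layers` tiers of `width` assets,
# where every asset in one tier can reach every asset in the next tier.
def layered_counts(layers: int, width: int) -> tuple[int, int]:
    """Return (asset count, number of entry-to-final-tier chains)."""
    assets = layers * width
    # A chain picks one asset per tier, so the choices multiply across tiers.
    chains = width ** layers
    return assets, chains


if __name__ == "__main__":
    for width in (2, 5, 10, 20):
        assets, chains = layered_counts(layers=4, width=width)
        print(f"width={width:>2}  assets={assets:>3}  candidate chains={chains:>7}")
```

Doubling `width` from 10 to 20 doubles the asset count (40 to 80) but multiplies the candidate chains by 16 (10,000 to 160,000). Real environments are far sparser than this fully connected model, so read the numbers as an illustration of growth rate, not a measurement.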

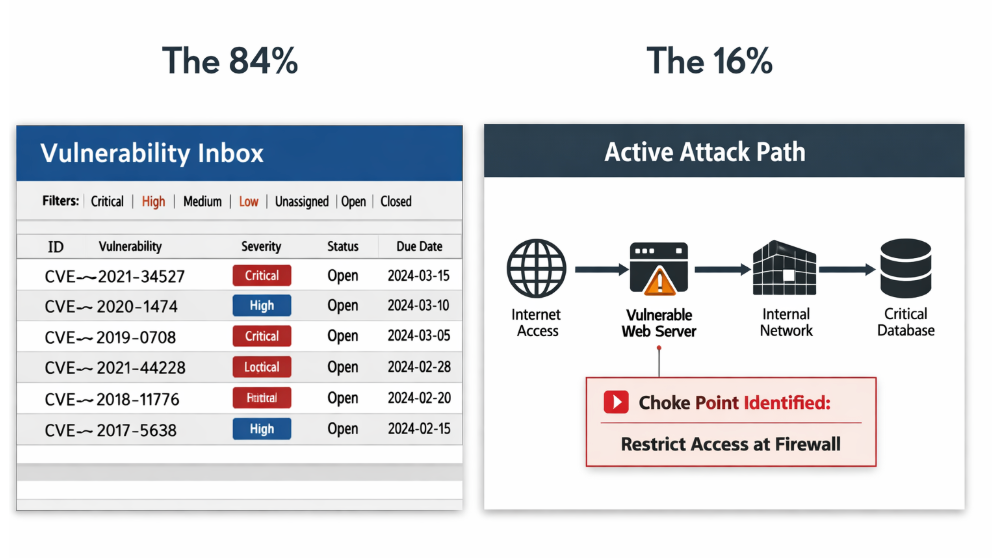

The deeper problem isn’t complexity itself. It’s that most security programs still evaluate risk in isolation. Vulnerabilities are scored independently. Cloud misconfigurations are reviewed in their own dashboards. Identity issues live in separate workflows. Each team sees its own slice of the problem, but attackers don’t work in slices. They chain weaknesses together. Consider an internet-facing application, a vulnerable component, permissive network access, and a reachable virtual machine. None of these looks catastrophic on its own. Combined, they form a working exploit path. If you’re counting findings instead of validating chains, you’re managing noise, not risk.
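One way to make “validating chains” concrete is to treat the environment as a directed graph and ask whether a path exists. The sketch below is a minimal illustration under stated assumptions: the node names and the `EDGES` graph are hypothetical, and an edge A -> B is assumed to mean “an attacker who controls A can reach or act on B.” It encodes the four-step chain from the paragraph above and uses breadth-first search to recover it.

```python
from collections import deque

# Hypothetical exposure graph for the chain described above. An edge A -> B means
# "an attacker who controls A can reach or act on B". Names are illustrative only.
EDGES = {
    "internet": ["web-app"],           # internet-facing application
    "web-app": ["vuln-component"],     # app bundles a vulnerable component
    "vuln-component": ["app-subnet"],  # exploiting it yields a foothold in the subnet
    "app-subnet": ["vm-01"],           # permissive network rule exposes the VM
    "vm-01": [],
}


def find_path(edges, source, target):
    """Return one shortest attack path from source to target, or None (breadth-first search)."""
    parents = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            # Walk parent pointers back to the source to rebuild the chain.
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for nxt in edges.get(node, []):
            if nxt not in parents:
                parents[nxt] = node
                queue.append(nxt)
    return None


if __name__ == "__main__":
    print(find_path(EDGES, "internet", "vm-01"))
```

Each edge on its own is just a finding. Deleting the `"app-subnet" -> "vm-01"` edge (tightening the network rule) makes `find_path` return `None`: the other findings may remain, but the chain no longer exists.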

The 16% Prove Exploitability

The organizations pulling ahead aren’t asking “Is this vulnerable?” but “Is this reachable in our environment today?” Answering that requires continuously modeling identities, assets, permissions, and controls together and validating whether an attacker can actually move from point A to point B. It means prioritizing based on exploitability rather than theoretical severity. It means removing paths, not just closing tickets.

That shift sounds subtle, but it changes the job. You stop reporting risk and start proving it. This is where most CTEM efforts stall: correlating cloud, SaaS, identity, endpoint, and application data into a living exposure model requires more than dashboards; it requires a system that can reason across domains and continuously validate attack paths. That is the difference between awareness and operational reality.

From Exposure Visibility to Exposure Reduction

Ask yourself a simple question: If something were compromised today, what damage is actually reachable from that point? Most organizations can’t answer that without manual coordination across multiple teams. Even fewer can answer it continuously.
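The question “what damage is actually reachable from that point?” maps naturally onto a reachability query. As a hedged sketch, assume a single hypothetical graph that mixes endpoints, identities, roles, secrets, and data stores, where an edge A -> B means “whoever controls A can reach, assume, or act on B.” The blast radius of a compromised node is then everything reachable from it. All names below are invented for illustration.

```python
from collections import deque

# Hypothetical environment graph mixing assets, identities, and permissions.
# An edge A -> B means "whoever controls A can reach, assume, or act on B".
GRAPH = {
    "laptop-42": ["user:alice"],                 # session token cached on the endpoint
    "user:alice": ["role:ci-deployer"],          # alice can assume this role
    "role:ci-deployer": ["bucket:artifacts", "vm:build-01"],
    "vm:build-01": ["secret:db-password"],       # secret readable on the build VM
    "bucket:artifacts": [],
    "secret:db-password": ["db:customers"],
    "db:customers": [],
    "user:bob": ["db:customers"],                # not reachable from laptop-42
}


def blast_radius(graph, compromised):
    """Return every node reachable from a compromised node, excluding the node itself."""
    seen = {compromised}
    queue = deque([compromised])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    seen.discard(compromised)
    return seen


if __name__ == "__main__":
    print(sorted(blast_radius(GRAPH, "laptop-42")))
    # ['bucket:artifacts', 'db:customers', 'role:ci-deployer',
    #  'secret:db-password', 'user:alice', 'vm:build-01']
```

Answering the question continuously then amounts to keeping the graph current and re-running the query, rather than coordinating a manual review across teams each time.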

CTEM is meaningful only when exposure validation links directly to response and hardening. When a path is proven reachable, it gets broken. When an incident is confirmed, the underlying conditions are adjusted so it can’t recur. The system doesn’t just document exposures; it removes them. That’s the difference between reducing alerts and reducing risk.
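Breaking a proven path efficiently means finding the control whose removal cuts it. A minimal sketch, assuming the same hypothetical edge semantics as a reachability graph: try removing each edge in turn and keep those whose removal alone makes the target unreachable. This brute-force check is a swapped-in illustration, not a method described in the study; on large graphs a minimum-cut algorithm (via the max-flow/min-cut theorem) is the standard approach, and often no single edge suffices, so a set of controls is needed.

```python
from collections import deque

# Hypothetical graph with two routes from the internet to a sensitive VM.
EDGES = {
    "internet": ["web-app", "vpn-gw"],
    "web-app": ["app-subnet"],
    "vpn-gw": ["app-subnet"],
    "app-subnet": ["db-vm"],  # the only way into db-vm
    "db-vm": [],
}


def reachable(edges, source, target):
    """True if target can be reached from source (breadth-first search)."""
    seen, queue = {source}, deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            return True
        for nxt in edges.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


def breaking_edges(edges, source, target):
    """Return edges whose removal alone makes target unreachable from source."""
    result = []
    for src, targets in edges.items():
        for dst in targets:
            # Copy the graph without this one edge and re-check reachability.
            pruned = {n: [t for t in ts if (n, t) != (src, dst)] for n, ts in edges.items()}
            if not reachable(pruned, source, target):
                result.append((src, dst))
    return result


if __name__ == "__main__":
    print(breaking_edges(EDGES, "internet", "db-vm"))
    # [('app-subnet', 'db-vm')]
```

Closing the web-app or the VPN route alone leaves the other route open, which is why patching either entry point in isolation would not remove the exposure in this example.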

The Divide Will Only Widen

Below a certain complexity level, manual oversight still appears sufficient. Above it, periodic controls and isolated tooling quietly stop scaling. The divide highlighted in this research is not temporary. It reflects a structural shift in how modern security programs must operate.

The leaders who pull ahead will not be the ones with the most tools. They will be the ones who can continuously prove what is exploitable in their environment and systematically eliminate those paths before they are used. If your CTEM program can’t do both, you don’t have CTEM. You have reporting. That’s the divide.

The Next Divide Is Already Forming

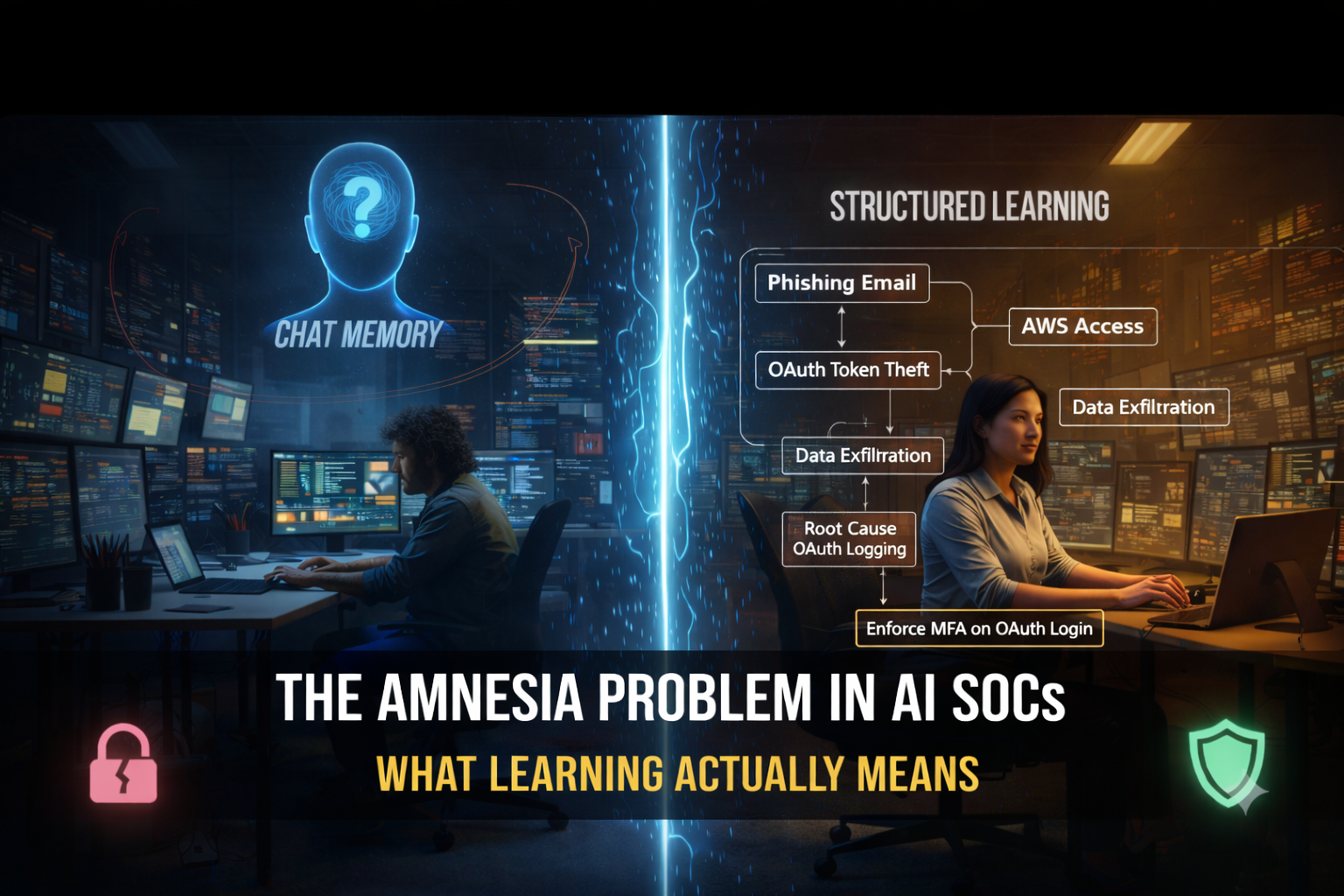

Even among the 16% who have operationalized CTEM, not all implementations are equal. Many programs still rely on human-led prioritization workflows. They correlate signals, improve visibility, and generate better-ranked tickets, but validation and execution remain manual. That works up to a point. As environments evolve, exposure signals multiply with changing identities, shifting infrastructure, and expanding SaaS permissions, while attack paths form and dissolve within hours. Review cycles were designed for static infrastructure; modern environments mutate faster than governance cadences. CTEM becomes bottlenecked by its own success, and this is where the next stage begins.

The Agentic Leap

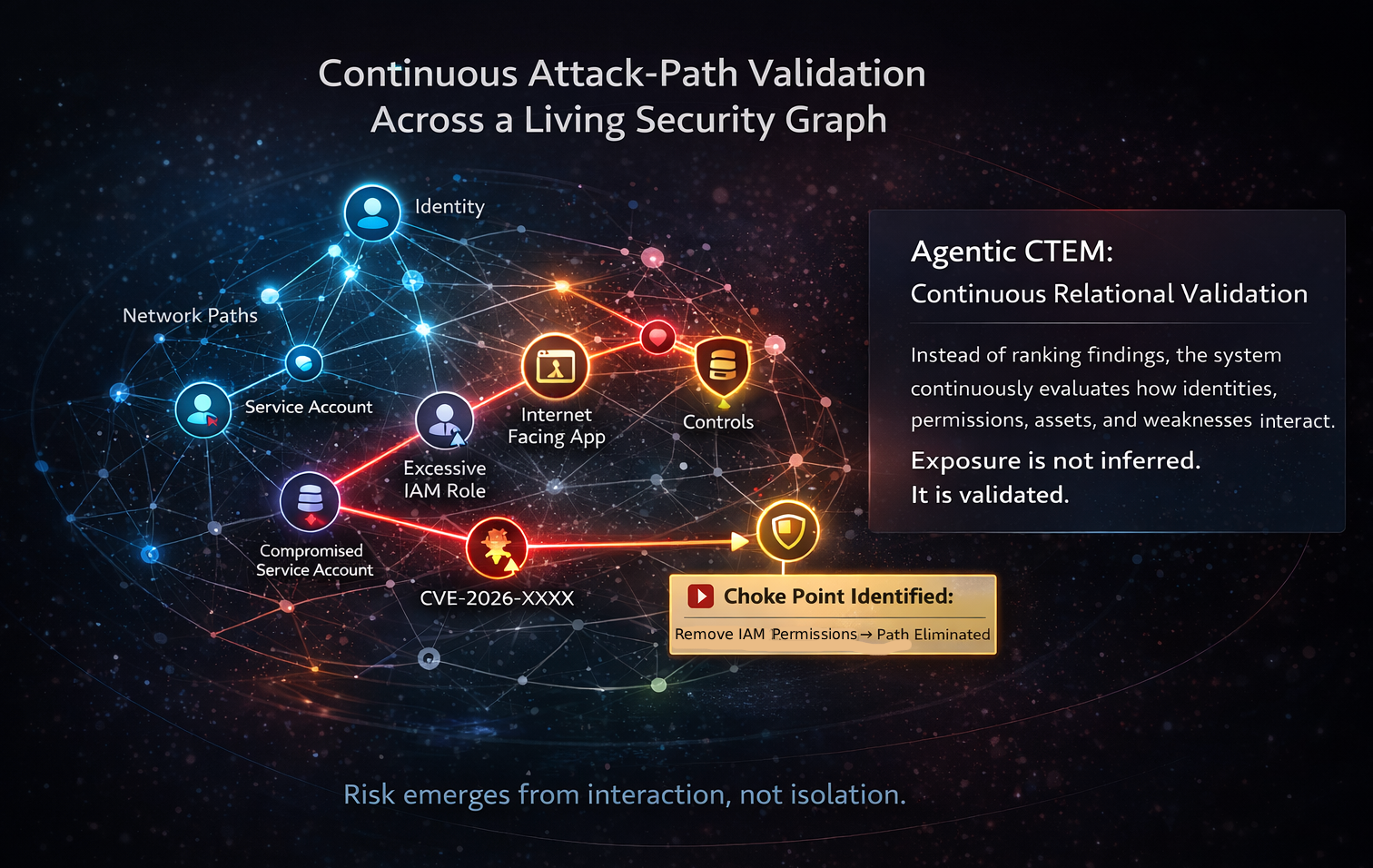

Traditional CTEM improves how we prioritize exposure, and the 16% of organizations that have operationalized it are seeing the benefit. But Agentic CTEM changes how exposure is understood. At its core, the shift is not about automation. It’s about continuity of reasoning.

Instead of evaluating signals in batches, an agentic model maintains a living representation of the environment. Assets, identities, permissions, controls, and relationships are not separate data feeds. They’re part of a unified context that is continuously evaluated as conditions change. In that model, exposure is no longer inferred from static attributes. It is validated through relational analysis. Can this identity traverse these permissions? Can this workload reach that asset? Does this misconfiguration meaningfully connect to an exploitable chain?

The system doesn’t just rank issues; it evaluates combinations and re-evaluates them as the graph shifts. It understands that risk emerges from interaction. Visibility scales linearly; attack paths don’t. Instead of arguing over severity scores, security teams receive a defensible statement: this path is reachable, this identity enables it, this control breaks it. That shifts the conversation from theoretical risk to operational reality.
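A minimal sketch of what “re-evaluates as the graph shifts” and a “defensible statement” could look like, under loudly stated assumptions: `ExposureModel`, the `add`/`remove` change events, the `identity:` naming prefix, and the statement fields are all hypothetical, invented for illustration. After every change the model recomputes a shortest path to each watched target, lists the identities on that path, and reports an edge whose removal alone cuts every route (any such edge must lie on every path, so only the found path’s edges need checking).

```python
from collections import deque


def find_path(edges, source, target):
    """Shortest path by breadth-first search; neighbors sorted for deterministic output."""
    parents, queue = {source: None}, deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for nxt in sorted(edges.get(node, ())):
            if nxt not in parents:
                parents[nxt] = node
                queue.append(nxt)
    return None


def single_break(edges, source, target, path):
    """First edge on `path` whose removal alone cuts every route to target, else None."""
    for src, dst in zip(path, path[1:]):
        edges[src].discard(dst)  # temporarily remove the edge
        still_reachable = find_path(edges, source, target) is not None
        edges[src].add(dst)      # restore it
        if not still_reachable:
            return (src, dst)
    return None


class ExposureModel:
    """Hypothetical 'living' model that re-validates watched targets after each change."""

    def __init__(self, source, targets):
        self.edges = {}         # node -> set of nodes it can reach or act on
        self.source = source    # where the attacker starts, e.g. "internet"
        self.targets = targets  # assets worth protecting

    def apply(self, change, src, dst):
        """Apply one change event ('add' or 'remove' an edge), then re-evaluate."""
        if change == "add":
            self.edges.setdefault(src, set()).add(dst)
        elif change == "remove":
            self.edges.get(src, set()).discard(dst)
        else:
            raise ValueError(f"unknown change: {change!r}")
        return self.evaluate()

    def evaluate(self):
        """One statement per reachable target: path, enabling identities, breaking edge."""
        statements = []
        for target in self.targets:
            path = find_path(self.edges, self.source, target)
            if path is None:
                continue
            statements.append({
                "reachable": path,
                "enabled_by": [n for n in path if n.startswith("identity:")],
                "break_at": single_break(self.edges, self.source, target, path),
            })
        return statements


if __name__ == "__main__":
    model = ExposureModel("internet", ["asset:customer-db"])
    model.apply("add", "internet", "asset:web-app")
    model.apply("add", "asset:web-app", "identity:svc-web")
    print(model.apply("add", "identity:svc-web", "asset:customer-db"))
    print(model.apply("remove", "identity:svc-web", "asset:customer-db"))  # path gone
```

The third event completes a path, so the model reports it as reachable, names `identity:svc-web` as the identity that enables it, and flags `internet -> asset:web-app` as an edge that breaks it; after the removal event the statement list is empty again. Recomputing from scratch on every event is the simplest correct approach; a system operating at real scale would likely need incremental graph algorithms, which this sketch does not attempt.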

Why This Matters

Vulnerability management created a decision gap. Teams addressed what was visible and measurable, but cross-domain exposure often remained untouched. CTEM narrowed that gap by reframing risk around exposure rather than raw findings. Agentic CTEM closes the loop by ensuring exposure is continuously validated as the environment evolves, rather than periodically reassessed after the fact. One model improves prioritization, while the other sustains proof. As complexity increases, proof becomes the only stable currency. That is the next divide.
