Food for Thought: Turning Security Data into a Smarter Diet for AI

Let’s not kid ourselves. AI won’t be running your cybersecurity program anytime soon, but it’s getting closer to becoming the brain behind it. And like any brain, it needs fuel. It doesn’t run on GPUs alone, and it certainly doesn’t get smarter just by eating more raw data. What fuels real intelligence is a structured, contextual diet: clean, connected, and enriched security data.

Cybersecurity, like every other industry, is now flooded with AI hype. But amid all the noise, AI can be a powerful ally if we stop ignoring one huge question:

What is our AI being fed?

If it’s just logs and alerts without the vitamins and minerals (context and connection), we’re not nourishing intelligence; we’re stuffing it with over-processed noise. And malnourished AI doesn’t get smarter. It hallucinates, misleads, and clogs your queue.

Let’s walk through why the security data you feed your AI is the single biggest driver of its health, and how the right diet delivers positive results rather than just bloat.

Is AI Smart Without Context?

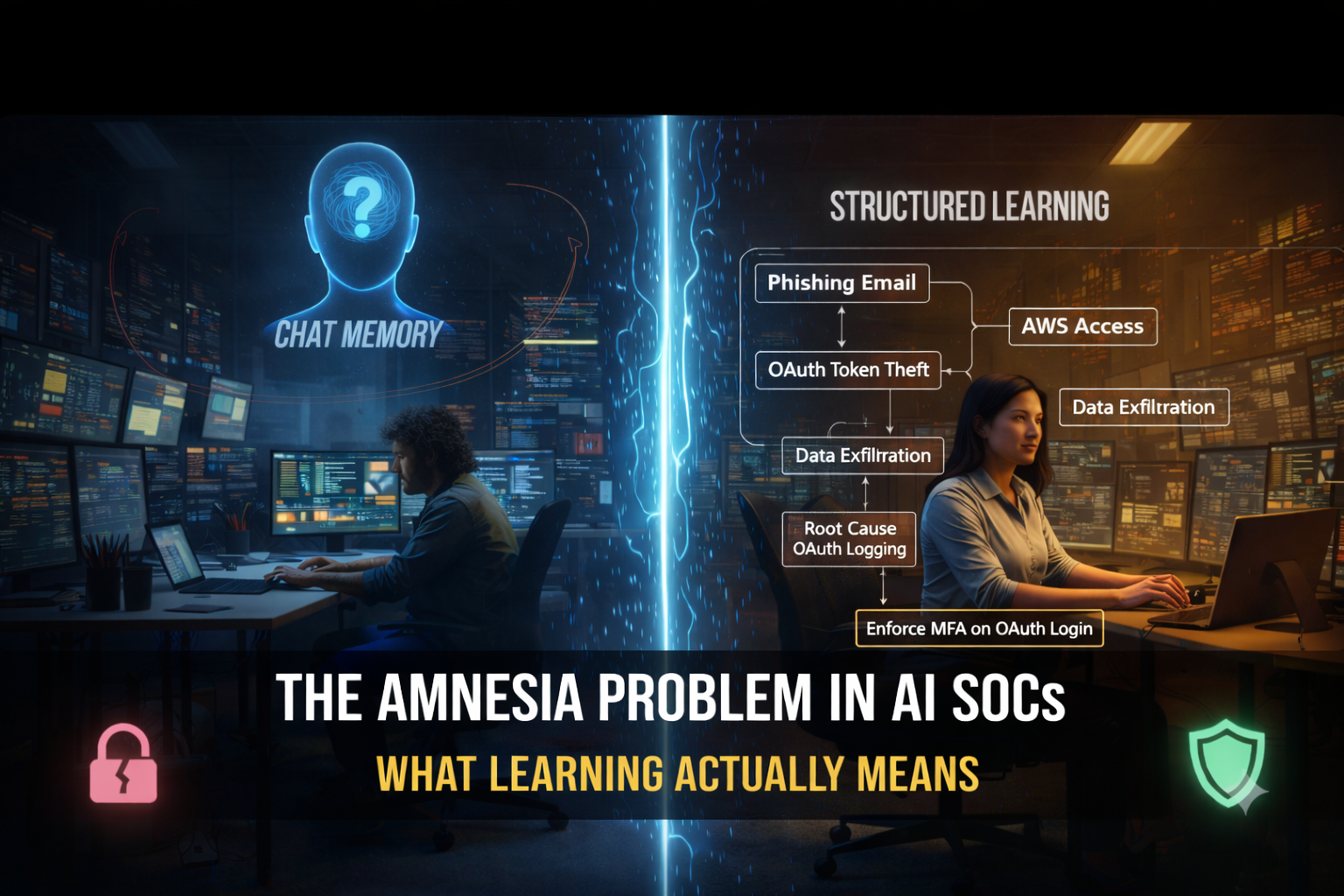

What happens when we throw a large language model (LLM) at raw security logs? Does it automagically produce intelligence? In our experience, all it produces are summaries, labels, and, more often than not, hallucinations.

The reason is simple: without context, how can AI understand what it’s seeing? How would it distinguish a critical path from background noise? It has no way of knowing which identity has admin rights or which workload is exposed to the internet. All the AI sees is text.

Context then becomes everything. And context resides in your systems of record, including vulnerability scanners, EDR, SIEM, IAM, CSPM, firewalls, and cloud workloads, among others. But frankly, even that isn’t enough. To be useful, AI must understand how these pieces connect.

- Is this alert on an asset that’s internet-facing?

- Is this CVE reachable from an external path?

- Do I already have a control in place that mitigates it?

Without these connections, AI becomes just another alert engine. With these connections, your AI becomes a decision-making engine.
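To make those three questions concrete, here is a minimal sketch of context-aware triage. Everything in it is an assumption for illustration: the `Asset` and `Alert` shapes, the `triage` function, the priority labels, and the placeholder CVE ID are hypothetical and do not come from any particular product.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    name: str
    internet_facing: bool
    # CVEs that an existing control is assumed to mitigate on this asset
    mitigated_cves: set[str] = field(default_factory=set)


@dataclass
class Alert:
    asset_name: str
    cve: str


def triage(alert: Alert, inventory: dict[str, Asset]) -> str:
    """Return a coarse priority using asset context, not alert text alone."""
    asset = inventory.get(alert.asset_name)
    if asset is None:
        return "unknown-asset"  # no context available: surface the blind spot
    if alert.cve in asset.mitigated_cves:
        return "low"  # a control already covers this CVE
    if asset.internet_facing:
        return "high"  # exposed and unmitigated
    return "medium"


# Hypothetical inventory; "CVE-0000-0001" is a placeholder, not a real CVE.
inventory = {
    "web-01": Asset("web-01", internet_facing=True),
    "db-01": Asset("db-01", internet_facing=False,
                   mitigated_cves={"CVE-0000-0001"}),
}

print(triage(Alert("web-01", "CVE-0000-0001"), inventory))  # high
print(triage(Alert("db-01", "CVE-0000-0001"), inventory))   # low
```

The same alert text lands at two different priorities because the surrounding context differs, which is the point of the questions above.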

The Missing Ingredient: Semantic Normalization

Just because we have the right ingredients doesn’t mean we know how to cook.

Security tools are like grocery stores; you have your ingredients, such as alerts, scan results, and a log or two in JSON. But without a recipe tying it all together, your AI is left trying to make a meal from a pantry of unmarked cans. Not good eating.

This is where we’ve seen many cybersecurity data pipelines fall short. They feed AI agents a mess of logs, asset lists, alerts, and tickets without structure. There’s no relationship or understanding of what connects to what, or why it matters.

AI needs to eat data. To consume the right data, however, it requires semantic normalization: a data fabric that organizes and interprets security inputs within a shared schema, so that it knows:

- Which asset belongs to which business service

- Which controls are covering which vulnerabilities

- Which identities have access to sensitive data

- Which external paths lead to internal risk

When you normalize that data, your AI starts to eat the right ingredients and begins to understand the whole, healthy recipe. It can reason, correlate, and ultimately act with confidence.
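As a rough illustration of what a shared schema can look like, the sketch below maps records from two tools into one `Finding` type. The input field names (`host`, `cve_id`, `cvss`, `device`, `vuln`, `level`), the `SERVICE_OF` map, and the CVE IDs are all hypothetical; real tool exports will differ. The severity bands follow the CVSS v3.x qualitative ratings, with a score of 0 folded into "low" for simplicity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    source: str
    asset: str      # normalized, lowercase asset name
    cve: str
    severity: str   # one of: low, medium, high, critical


def from_scanner(rec: dict) -> Finding:
    """Map a hypothetical scanner record (numeric CVSS score) to a Finding."""
    score = float(rec["cvss"])
    if score >= 9.0:
        sev = "critical"
    elif score >= 7.0:
        sev = "high"
    elif score >= 4.0:
        sev = "medium"
    else:
        sev = "low"
    return Finding("scanner", rec["host"].lower(), rec["cve_id"], sev)


def from_edr(rec: dict) -> Finding:
    """Map a hypothetical EDR record (nested device, text level) to a Finding."""
    return Finding("edr", rec["device"]["hostname"].lower(),
                   rec["vuln"], rec["level"].lower())


# Hypothetical asset-to-business-service map.
SERVICE_OF = {"web-01": "checkout"}

findings = [
    from_scanner({"host": "WEB-01", "cve_id": "CVE-0000-0001", "cvss": 9.8}),
    from_edr({"device": {"hostname": "web-01"},
              "vuln": "CVE-0000-0002", "level": "HIGH"}),
]

# Once normalized, records from different tools join on the same asset key.
by_asset: dict[str, list[Finding]] = {}
for f in findings:
    by_asset.setdefault(f.asset, []).append(f)

for asset, items in by_asset.items():
    print(asset, SERVICE_OF.get(asset, "unmapped"), [f.severity for f in items])
```

Two records that originally disagreed on casing, field names, and severity scale now sit under one asset and one business service, which is what lets anything downstream correlate them.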

Context isn’t a nice-to-have. It’s the difference between hallucination and decision.

Simulation Is How AI Learns to Digest

Alright, so we’re getting our AI healthy by feeding it clean, normalized, contextual data. But much like the voice we hear in the back of our heads every time we eat a salad, “eating well is just the first step.” To grow stronger and more effective, AI needs to digest that data: learn from it, make connections, and act on what it sees.

That’s where simulation comes in.

We don’t want to stop at summarizing or labeling what the AI has ingested. To move from passive insights to real outcomes, AI needs to continuously rehearse the threats, just like a body builds muscle by working out, not by reading a fitness blog.

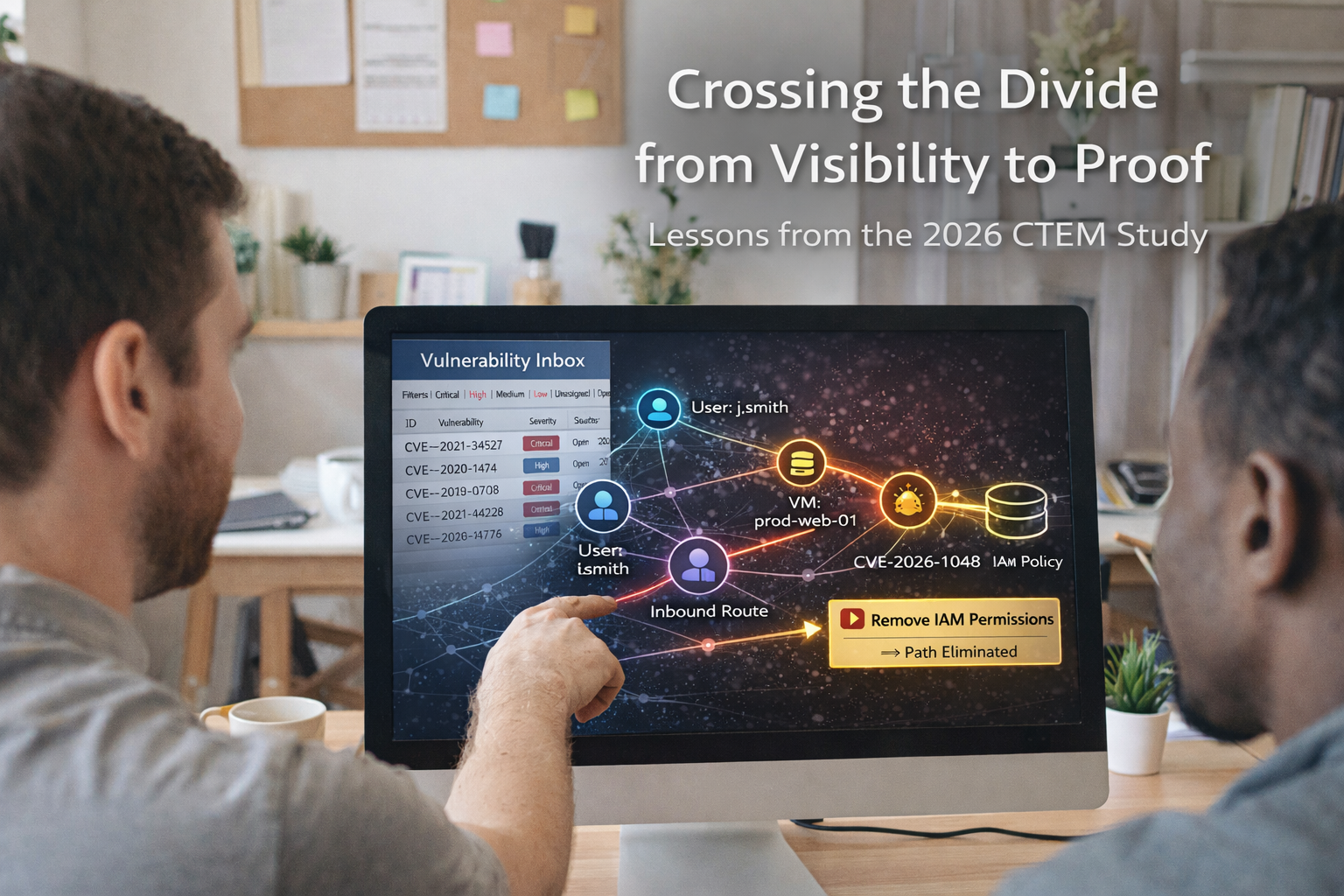

Platforms like Tuskira don’t just label a vulnerability “critical.” They simulate how an attacker would actually exploit it:

- Would the attacker come in through an open port in the cloud?

- Would they laterally move to an unpatched database?

- Would your controls stop them, or silently fail?

AI learns by doing. Simulation provides that training ground for it to build those muscles.

And just as athletes prepare for the game on a practice field, we run these scenarios in a digital twin of your environment: a safe, real-time replica of your actual systems. There, AI can test what works, what fails, and where defenders should focus their efforts. We’re no longer theorizing. Now we have a validated strategy, built on practiced, lived experience.

That’s how AI becomes healthy, takes action, and proves its recommendations through simulated battle.
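A toy version of this kind of rehearsal is a reachability check over a graph of possible attacker moves. The sketch below is not how Tuskira or any specific platform implements simulation; the node names, the `EDGES` and `BLOCKED` structures, and the `attack_path` function are hypothetical, and a real digital twin would model far more than hops between hosts.

```python
from collections import deque

# Hypothetical model: each directed edge is a move an attacker could attempt.
EDGES = {
    "internet": ["web-01"],
    "web-01": ["app-01"],
    "app-01": ["db-01"],
    "db-01": [],
}

# Moves that an existing control is assumed to stop.
BLOCKED = {("web-01", "app-01")}


def attack_path(edges, blocked, start, target):
    """Breadth-first search for the shortest unblocked path, or None."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for nxt in edges.get(node, []):
            if (node, nxt) in blocked or nxt in seen:
                continue
            seen.add(nxt)
            queue.append(path + [nxt])
    return None


# With no controls, the database is reachable from the internet.
print(attack_path(EDGES, set(), "internet", "db-01"))
# ['internet', 'web-01', 'app-01', 'db-01']

# With the control in place, the same attempt fails.
print(attack_path(EDGES, BLOCKED, "internet", "db-01"))
# None
```

Running the same scenario with and without a control is the simplest form of asking whether that control would stop the attacker or silently fail.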

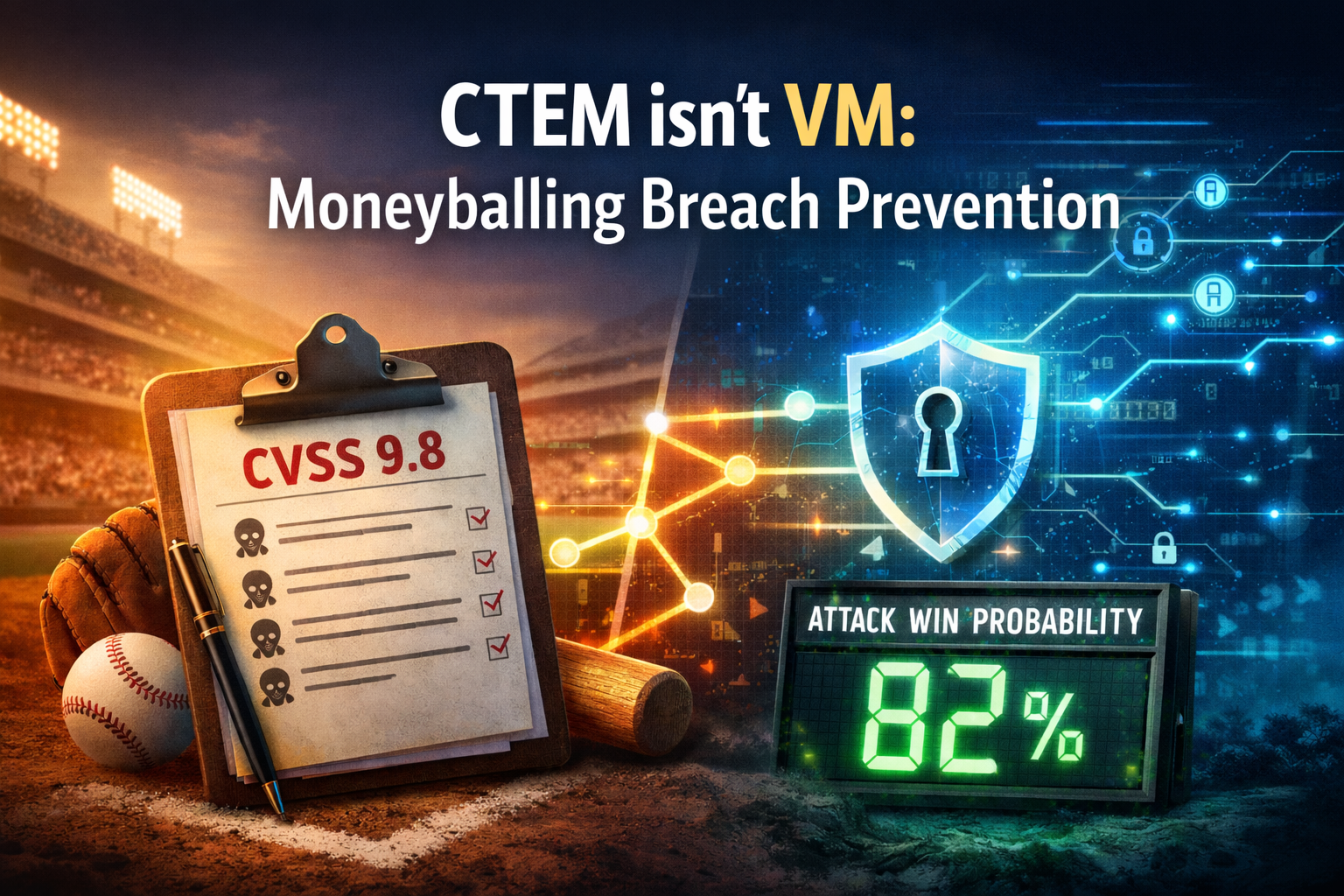

Data Is the New Detection Rule

For years, the backbone of cybersecurity has been detection rules: if X happens, trigger Y. But as modern threats and environment sprawl have shown us, those rules alone can’t keep up.

With AI, data quality has become the new detection logic.

Do we care about how many rules have been written? Or do we care how complete, accurate, and connected our underlying data is? The smartest AI in the world can’t compensate for blind spots, siloed logs, or stale asset inventories, just like no chef can cook a great meal with expired ingredients.
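One way to make “complete, accurate, and connected” measurable is a small health check over the asset inventory. This is a sketch under stated assumptions: the record fields (`last_seen`, `owner`), the seven-day freshness window, and the `data_health` function are hypothetical choices, not an established standard.

```python
from datetime import datetime, timedelta, timezone


def data_health(assets, now, max_age=timedelta(days=7)):
    """Return the fraction of assets seen recently and the fraction with an owner."""
    total = len(assets)
    if total == 0:
        return {"fresh": 0.0, "owned": 0.0}
    fresh = sum(1 for a in assets if now - a["last_seen"] <= max_age)
    owned = sum(1 for a in assets if a.get("owner"))
    return {"fresh": fresh / total, "owned": owned / total}


# Fixed reference time so the example is reproducible.
now = datetime(2025, 1, 31, tzinfo=timezone.utc)

# Hypothetical inventory records.
assets = [
    {"name": "web-01", "last_seen": now - timedelta(days=1), "owner": "payments"},
    {"name": "db-01", "last_seen": now - timedelta(days=30), "owner": None},
]

print(data_health(assets, now))  # {'fresh': 0.5, 'owned': 0.5}
```

A score like this says nothing about how clever the model is. It only tells you how much of the environment the model can actually see and attribute.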

So instead of asking: “How smart is your AI?”

We should be asking: “What is my AI seeing, and how well does it understand it?”

When it comes to AI, we need to stop thinking that more parameters equal more value and instead focus on whether it delivers accurate, actionable, and trustworthy results within our environment.

We want to know:

- Can AI reduce false positives?

- Can AI help us respond faster?

- Does AI understand our specific environment?

- Can we trust AI’s decisions?

If you want meaningful outcomes from AI, look at the menu. AI won’t thrive on raw logs or empty calories. It needs security data that’s clean, connected, and contextual. The kind it can digest, reason over, and act on. Feed it junk, and you get bloated noise. Feed it right, and it prioritizes, defends, and accelerates response.