What Happened When a CISO Hired AI into His Team?

TLDR: In late 2024, a CISO faced an AI-powered breach that bypassed every layer of his security stack. Patch cycles failed. Tools missed it. The team was drowning in noise. Within a week of deploying Tuskira’s AI Analysts, they cut false positives by 95%, reduced manual triage by 80%, and neutralized the next AI-driven payload before it spread. This is what happens when AI works with you, not against you.

In late 2024, the CISO of a global logistics company thought he had seen it all.

His team ran a tight ship. Regular patch cycles. A mix of best-of-breed tools across SIEM, EDR, WAF, VM, and CSPM. Strong identity governance, solid coverage across cloud and hybrid workloads. They even ran purple team exercises twice a year. It wasn’t perfect, but it was disciplined.

Then came the breach, not through phishing or a missed CVE, but through an intelligent, quiet chain of AI-generated anomalies.

It Started With Silence

The attacker didn’t trip their alarms. Instead, it mimicked legitimate employee behavior. It moved laterally through misconfigured SaaS accounts and lightly monitored cloud functions. Within hours, it chained together low-severity, unprioritized vulnerabilities. None of the tools caught it. None of the alerts were escalated.

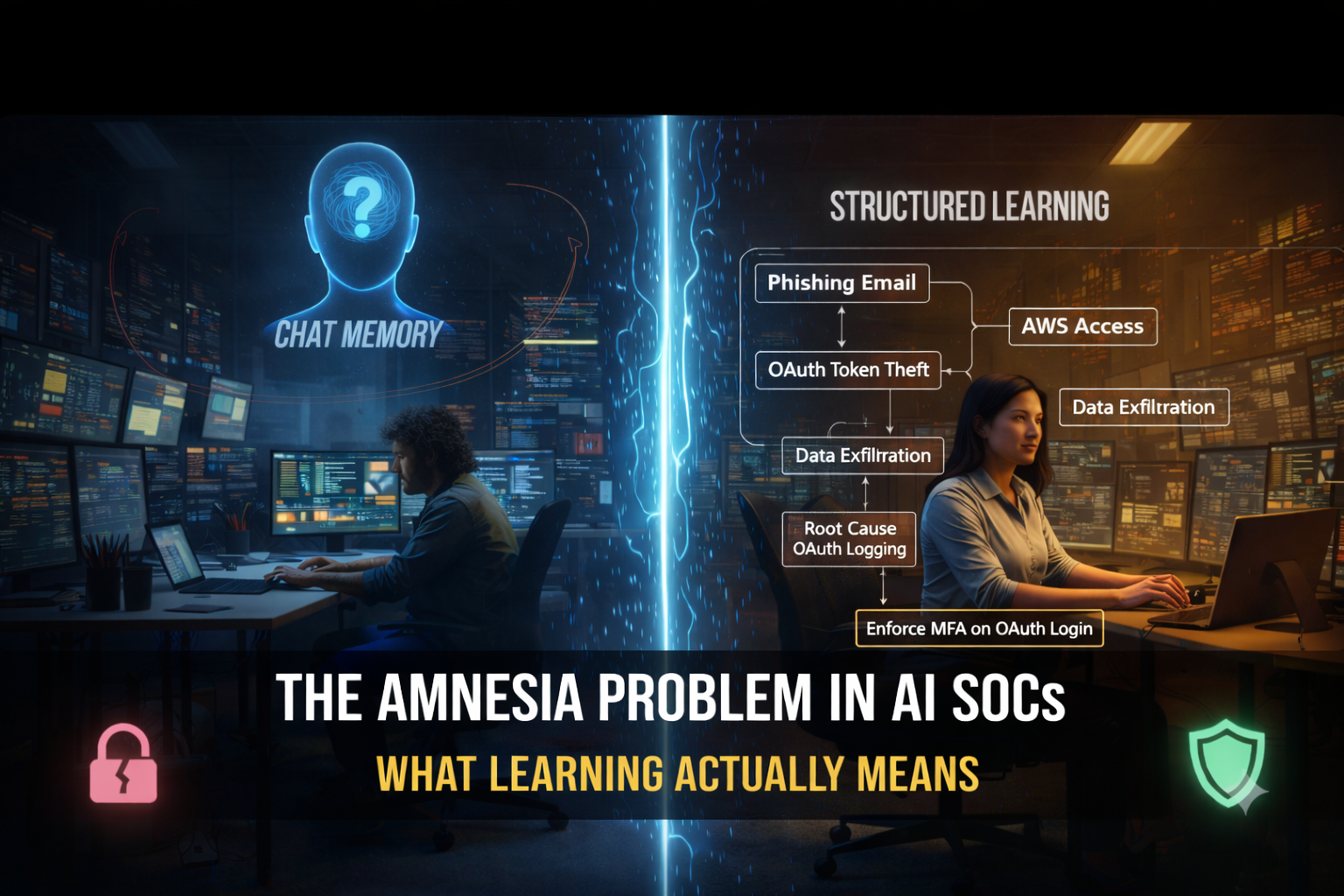

The postmortem revealed that the attack exhibited characteristics of an AI-driven campaign. The speed of lateral movement, the rapid chaining of seemingly insignificant vulnerabilities, and the adaptive nature of the attack patterns suggested an autonomous system capable of learning and reacting in real time, at a pace far beyond that of a human-operated intrusion.

This security team was up against an autonomous adversary, trained on years of breach data, capable of launching and iterating at a scale their defenses couldn’t match.

The Breaking Point

The CISO gathered his team. They were exhausted. Alert queues had spiked 4x. Every triage cycle felt like a losing battle. Worse, they didn’t even know which alerts mattered. CVSS scores failed them. Tool coverage didn’t align. The team was responding hours after the attacker had already moved.

It wasn’t the individual tools failing; it was the operating model itself. Their defenses were being evaded.

Security was reactive, with no real insight into whether the issues they were patching were actually exploitable, or whether they were missing the ones that were. The team quickly realized they were under-resourced and solving the wrong problems.

The Move to AI Analysts

That’s when we met. This global logistics company wasn’t looking for another dashboard, detection tool, or alert reduction platform. They needed something fundamentally different:

- A system that knew what mattered in their environment.

- An approach that detected, validated, and acted.

- A way to close the loop between signals, posture, and response.

They had all the right tools, but they needed more capacity. More certainty. More speed. And they couldn’t hire their way out of the problem. So they hired AI.

Tuskira’s AI Analysts were brought in as part of the team to augment their human experts with relentless, role-specific execution.

This required a shift from static scoring to continuous simulation. From alert-centric to exposure-aware.

Step 1: Unify the Stack into a Security Mesh

We began by connecting their existing tools (SIEM, CSPM, EDR, IAM, VM, and more) into a single ingestion layer. Data from those tools was normalized, de-duplicated, and enriched into a security mesh to create a real-time view of their infrastructure.

This security mesh is an AI-curated model of assets, controls, signals, and gaps across cloud, on-prem, and SaaS environments.
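To make "normalized and de-duplicated" concrete, here is a minimal sketch of the idea, not Tuskira's actual implementation. The field names (`host`, `hostname`, `cve`, `rule`), the tool labels, and the `CVE-0000-0001` identifier are all placeholders assumed for illustration; real connectors map each tool's own schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    asset: str   # normalized asset name
    issue: str   # e.g. a CVE ID or a detection rule name
    source: str  # which tool reported it


def normalize(raw: dict, source: str) -> Finding:
    # Hypothetical field names: different tools label the same thing differently.
    host = (raw.get("host") or raw.get("hostname") or "").strip().lower()
    issue = (raw.get("cve") or raw.get("rule") or "").strip().upper()
    return Finding(asset=host, issue=issue, source=source)


def build_mesh(records):
    """Collapse findings on (asset, issue) and remember which tools saw each one."""
    mesh = {}
    for raw, source in records:
        finding = normalize(raw, source)
        mesh.setdefault((finding.asset, finding.issue), set()).add(finding.source)
    return mesh


# Placeholder records: two tools report the same issue on the same host.
records = [
    ({"host": "WEB-01", "cve": "cve-0000-0001"}, "vm_scanner"),
    ({"hostname": "web-01 ", "cve": "CVE-0000-0001"}, "edr"),
    ({"host": "db-01", "rule": "suspicious_login"}, "siem"),
]
mesh = build_mesh(records)
```

In this toy version, the two reports about `web-01` collapse into a single entry that records both reporting tools, which is the property that lets later steps reason about coverage rather than raw alert counts.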

Real Talk: Integration isn’t magic. Though we have native integrations with 150+ tools, we didn’t hook up 150+ tools overnight. We started with their key systems: Splunk, CrowdStrike, Azure AD, and Tenable. Prebuilt connectors handled ingestion. Within hours, their environment started taking shape. That’s how the mesh grows: incrementally, based on priority and live telemetry.

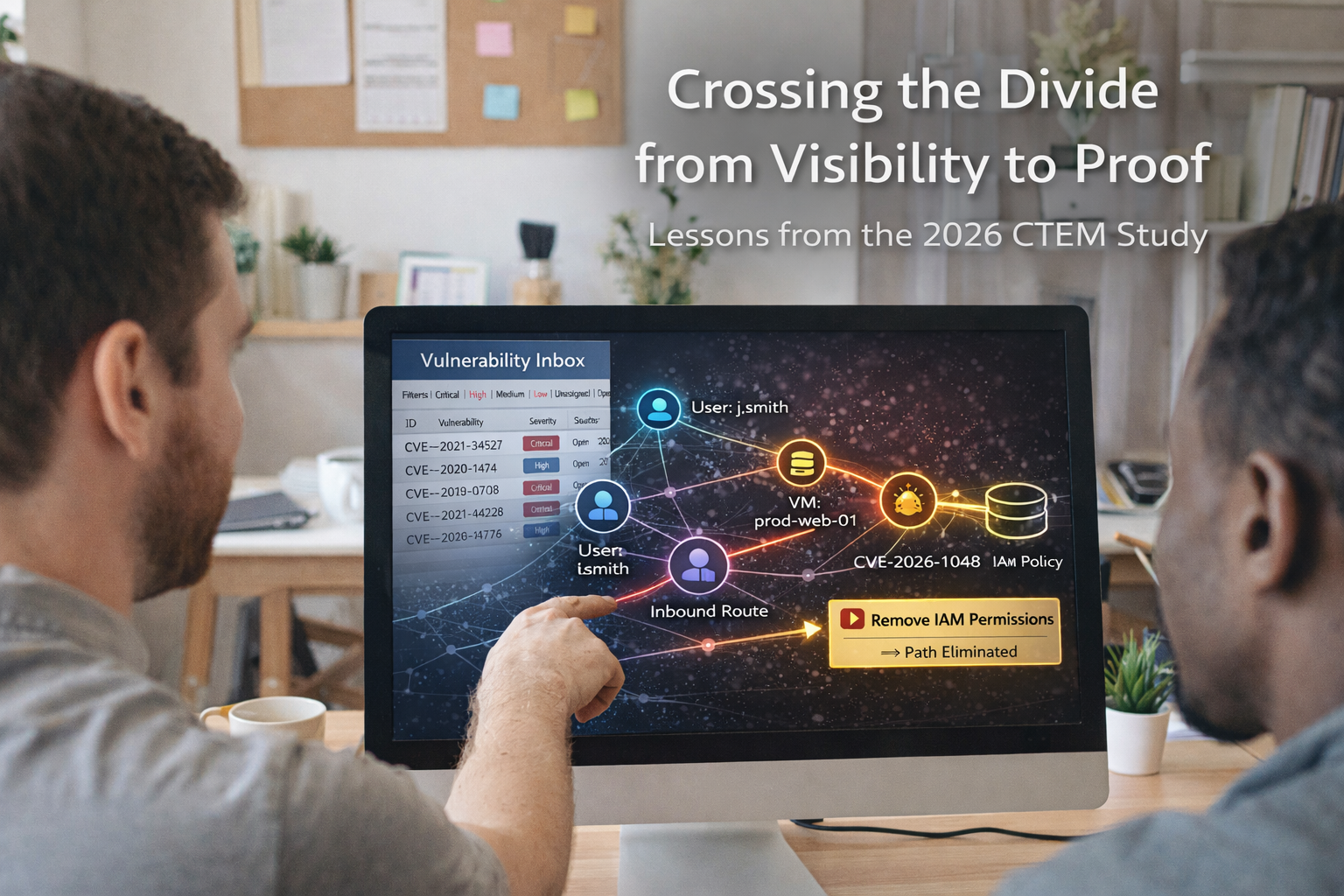

Step 2: Build a Live Digital Twin

Using this telemetry, we constructed a digital twin: a live, dynamic model of their environment, including asset relationships, identity paths, control coverage, and external exposures.

This allowed us to simulate how the AI attack would move:

- Which identities were over-permissioned

- Which vulnerabilities were reachable

- Which alerts mapped to actual risk

Yes, it’s live! The twin isn’t a snapshot; it mirrors the customer’s environment in near real time. When identity roles change in Azure AD or firewall configs shift, the twin updates. We didn’t ask their team to manually draw their architecture; we discovered it dynamically, from the systems already in use.
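One simple way to picture the twin is as a directed graph of "can reach" relationships. The sketch below is only an illustration under that assumption: the node names, edges, and `VULN-A`/`VULN-B` labels are invented, and a real twin would carry far richer data. It shows how "which vulnerabilities were reachable" becomes a graph question.

```python
from collections import deque

# Hypothetical edges: "A": ["B"] means an attacker on A can reach B.
edges = {
    "internet": ["web-app"],
    "web-app": ["svc-account"],
    "svc-account": ["storage", "db"],
    "build-server": ["db"],
}

# Hypothetical scanner output: asset -> vulnerability label.
vulnerable_assets = {"db": "VULN-A", "build-server": "VULN-B"}


def reachable_from(start, graph):
    """Breadth-first search returning every node reachable from start."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


exposed = reachable_from("internet", edges)
reachable_vulns = {
    asset: vuln for asset, vuln in vulnerable_assets.items() if asset in exposed
}
```

Here `db` is reachable from the internet through the service account, while `build-server` has no inbound path, so only the first vulnerability is treated as reachable regardless of its severity score.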

Step 3: Simulate Attack Paths and Validate Defenses

With the twin in place, we ran continuous attack simulations based on real-world IOCs, TTPs, and even live dark web data. We recreated the exact attack chains that had compromised their systems.

How real are the simulations? We didn’t just run canned exploits. We emulated attacker behavior, chaining identity misuse, lateral movement, and exploit validation, all of which were verified using live IOCs.
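As a rough illustration of what chaining hops against validated controls can look like (a toy sketch with invented hop names and control states, not the simulation engine itself), each hop is open only if no effective control blocks it, and an attack path exists when open hops connect the entry point to the target:

```python
# Each hop: (source, target, control expected to block it, or None).
# All names and control states below are hypothetical.
hops = [
    ("internet", "saas-account", "mfa"),
    ("saas-account", "cloud-function", None),
    ("cloud-function", "database", "network-policy"),
]


def open_hops(hops, control_effective):
    """Keep only hops that no effective control blocks."""
    graph = {}
    for src, dst, control in hops:
        if control is None or not control_effective.get(control, False):
            graph.setdefault(src, []).append(dst)
    return graph


def find_path(graph, start, goal, path=None):
    """Depth-first search for one path from start to goal, or None."""
    path = (path or []) + [start]
    if start == goal:
        return path
    for nxt in graph.get(start, []):
        if nxt not in path:  # avoid revisiting nodes
            found = find_path(graph, nxt, goal, path)
            if found:
                return found
    return None


# Case 1: both controls exist on paper but fail validation.
failing = open_hops(hops, {"mfa": False, "network-policy": False})
path_when_failing = find_path(failing, "internet", "database")

# Case 2: the network policy actually works, which breaks the chain.
working = open_hops(hops, {"mfa": False, "network-policy": True})
path_when_working = find_path(working, "internet", "database")
```

The point of the second case is that one validated control anywhere along the chain is enough to close the path, which is why validating controls matters more than counting them.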

The simulations revealed three critical truths:

- 93% of alerts were non-exploitable.

- Over 40% of "patched" CVEs were still exposed due to failed or misconfigured controls.

- Detection rules were misaligned with attacker behavior.

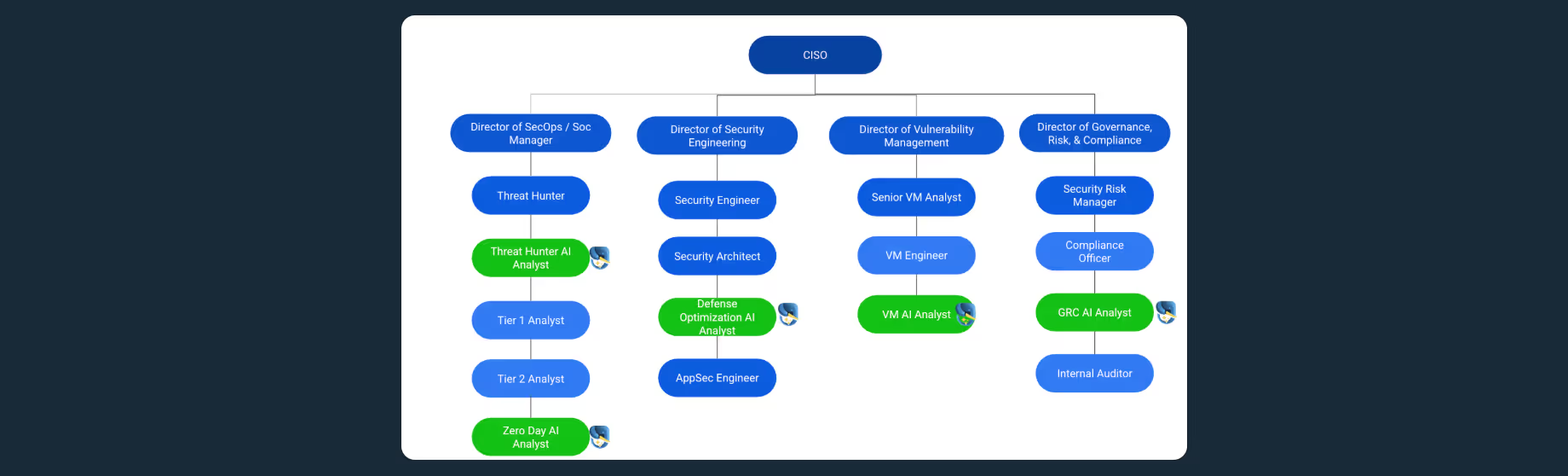

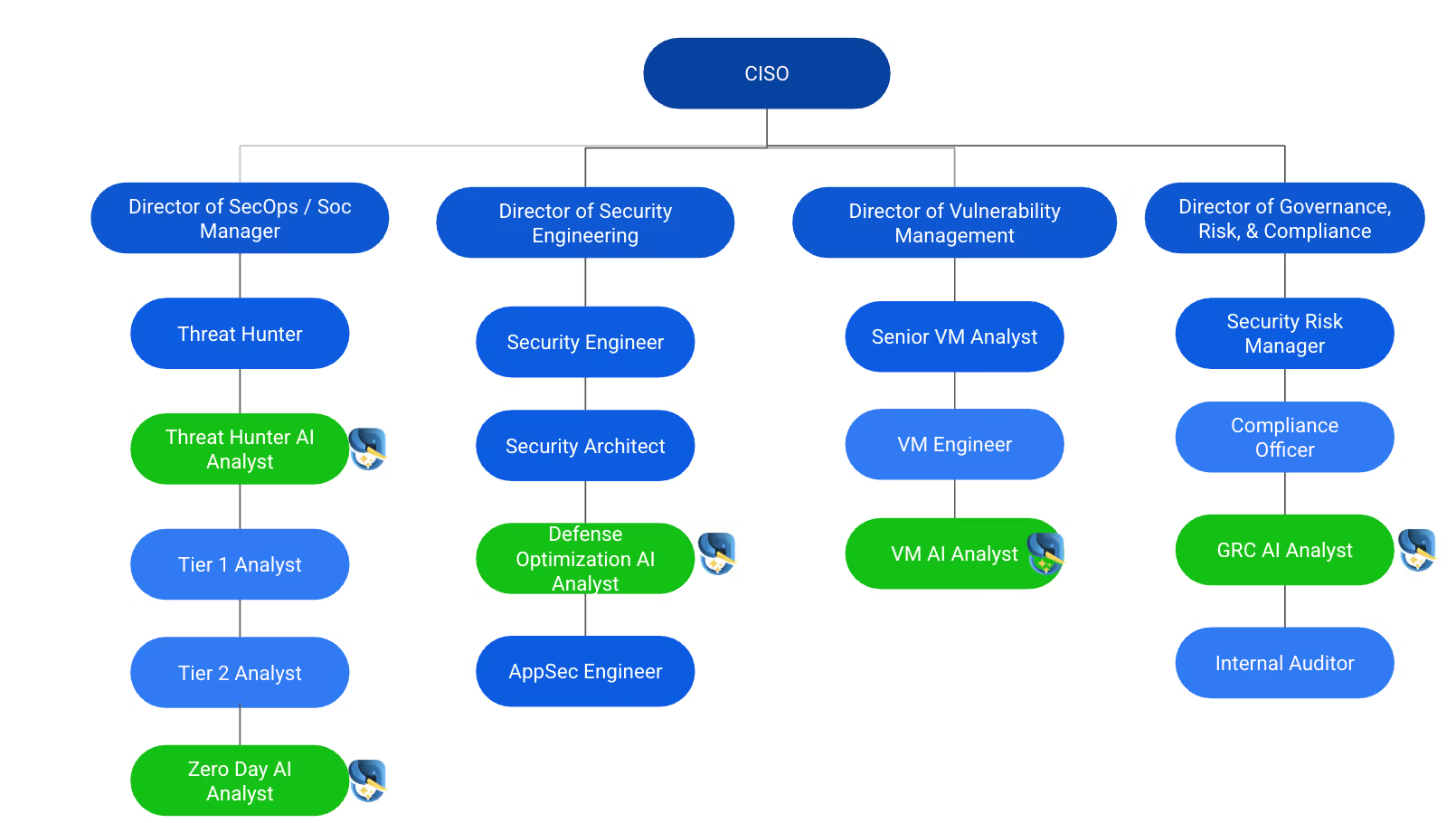

Step 4: Deploy Role-Specific AI Analysts

To scale defense, we deployed a workforce of virtual AI analysts, each trained to perform a core security function continuously:

- Zero Day Analyst to detect and simulate novel threats based on emerging behavior.

- Threat Intel Analyst to correlate IOCs and map emerging threats to internal exposure.

- Vulnerability Analyst to prioritize real exploitability over severity scores.

- Defense Optimization Analyst to tune SIEM, EDR, and firewall rules in real time.

- GRC Analyst to map findings to frameworks and generate defensible evidence.

How autonomous are they? The security team had the final say. The AI Analysts operated under strict thresholds and optional approval gates. Each tuning suggestion, whether to a SIEM rule or firewall policy, came with a validation log from the digital twin. When the Zero Day Analyst flagged a new lateral movement chain, it didn’t just alert, it simulated, explained, and proposed a fix. No guesswork.
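A threshold-plus-approval-gate policy of this kind can be sketched in a few lines. Everything here is assumed for illustration: the `Proposal` fields, the `decide` function, and the default thresholds are hypothetical and are not the product's actual policy model.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    action: str              # e.g. "tighten firewall rule"
    confidence: float        # hypothetical score between 0.0 and 1.0
    validated_in_twin: bool  # did the change pass simulation in the twin?
    blast_radius: int        # number of assets the change would touch


def decide(p: Proposal, min_confidence: float = 0.9, max_blast_radius: int = 5) -> str:
    """Return 'reject', 'auto_apply', or 'needs_approval' for a proposed change."""
    if not p.validated_in_twin:
        return "reject"  # never act on an unvalidated change
    if p.confidence >= min_confidence and p.blast_radius <= max_blast_radius:
        return "auto_apply"
    return "needs_approval"  # a human makes the final call


small_fix = Proposal("tune SIEM rule", 0.95, True, 2)
wide_change = Proposal("change firewall policy", 0.95, True, 40)
unvalidated = Proposal("disable account", 0.99, False, 1)
```

With these assumed defaults, the narrow, validated change is applied automatically, the wide-reaching one is routed to a human, and anything that was not validated in the twin is rejected outright.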

Each analyst operated autonomously, learning from prior detections, validation outcomes, and system feedback. They collaborated in the background, sharing findings, refining posture, and executing policy adjustments across the stack.

The Result: Response That Finally Kept Up

In less than a week:

- Their false positive rate dropped by 95%

- His team reduced manual triage by 80%

Less than a week? For real. The CISO scoped the rollout to a focused subset: one cloud tenant, one high-priority app, and top-tier detection tools. That’s why the outcome was fast. The goal wasn’t to boil the ocean. It was to prove that AI Analysts could see what his team couldn’t and act faster than they could.

More importantly, the next time an AI-generated payload hit their environment, the system detected it, simulated its path, verified defenses, and initiated mitigations across firewall, EDR, and IAM automatically.

Fighting Fire With Fire

AI is a dangerous adversary.

This security team brought AI to the fight, not to replace their people, but to work alongside them, shoulder to shoulder, and dramatically increase their efficiency.

That’s what we’re building. Not another interface. An autonomous, operational force that integrates with your tools and team to work together, faster, smarter. The next step isn’t another tool. It’s hiring the only teammates who never sleep, never burn out, and defend in real time. That’s Tuskira. Your next security hire should be AI.