AI Agents in Cybersecurity: The Industry is Further Along Than You May Think

Earlier this week, R Street Institute released one of the most comprehensive breakdowns I’ve seen on the current and future state of agentic AI in cybersecurity. Titled "The Rise of AI Agents: Anticipating Cybersecurity Opportunities, Risks, and the Next Frontier," the report goes deep into both the promise and perils of deploying autonomous AI agents. It deserves the attention it’s getting.

Rather than summarize it for you, I want to respond to it. Because while I think R Street nailed the foundational points, I also think we’re further along as an industry than the report implies in some key areas, especially around operationalization.

Where R Street Nails It

1. The Four-Layer Framework is Useful and Actionable

R Street's division of agentic AI into four core layers (Perception, Reasoning, Action, and Memory) is one of the clearest mental models I’ve seen. It provides a structured way to identify where risks can arise, assess the capabilities in place, and determine what to secure at each layer. That makes it directly useful for designing both the architecture and the guardrails.

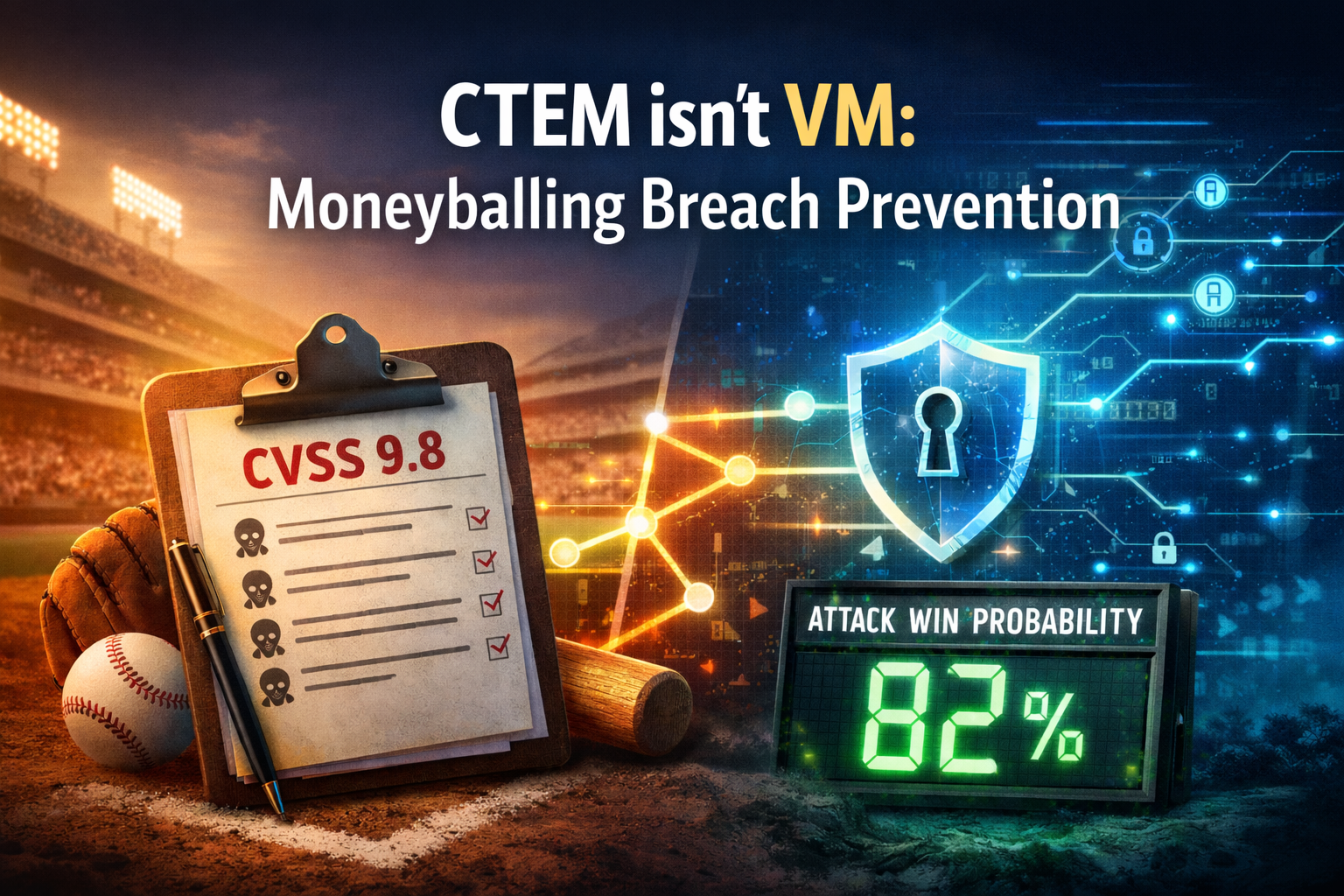

2. The Threat is Real, and the Need is Urgent

The report gets straight to the point that our cybersecurity infrastructure is overwhelmed. Alert fatigue, staffing shortages, and tool sprawl are pushing teams to the brink. Agentic AI is a necessary force multiplier. R Street rightly positions agents not as a replacement for analysts, but as teammates who work continuously and learn over time.

3. Hallucinations Are a Serious Problem

The section on hallucination and reasoning drift is fantastic. It lays out both how these failures complicate deployment and why addressing them matters. Unlike passive AI systems, agents take actions: they trigger responses, tune controls, and reclassify vulnerabilities. If their output is wrong, the consequences are operational. R Street’s emphasis on validation layers, transparency, and memory hygiene is spot on.

4. Policy Has to Catch Up

From voluntary guidelines for agent deployment to the need for traceability and agent IDs, R Street's policy recommendations are smart, flexible, and timely. They understand that regulating agentic systems isn’t about holding them back, but about alignment.

Where the Industry is Already Ahead

While the report focuses heavily on potential and emerging capabilities, some of the best practices it recommends are already live in production platforms, both ours and, unfortunately, those of our direct and indirect competitors. Here’s where the AI frontier isn't theoretical anymore:

1. Validated Reasoning Is Fast Becoming Table Stakes

R Street rightly calls out the danger of agents hallucinating and acting on unverified conclusions. That’s a real concern in general-purpose LLMs. But in production security environments, validated reasoning is fast becoming the baseline.

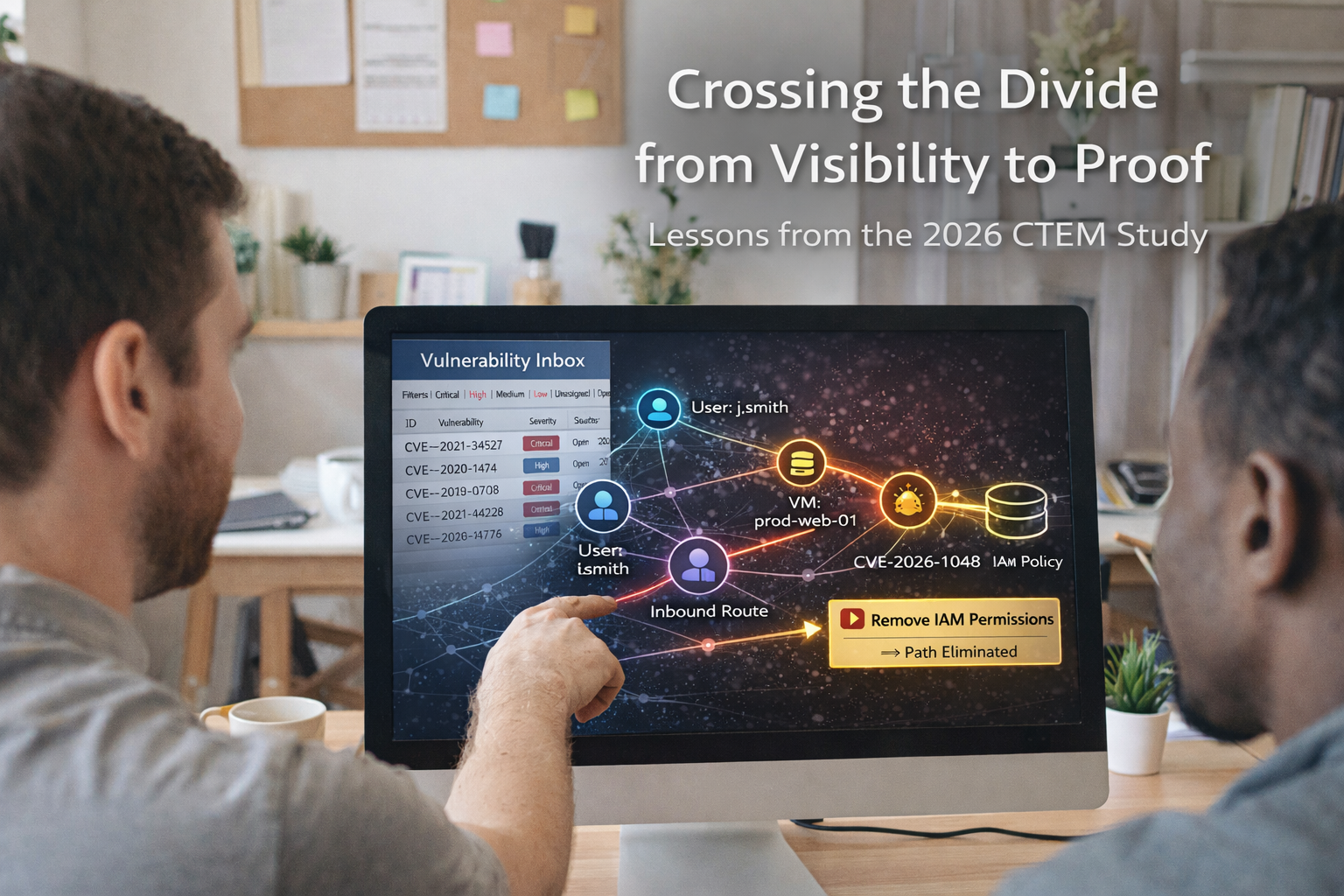

Take Tuskira, for example: Before an agent suppresses a detection rule or escalates a CVE, it can simulate the attack path, verify whether the vulnerability is reachable, and check if compensating controls already mitigate it. If the conditions don’t match, the agent doesn’t act. No guesswork. No blind trust.

Every recommendation passes through a real-world validation loop: simulate the threat, correlate it with live telemetry, and confirm that the proposed action reduces actual risk. If that validation fails, the agent replans autonomously.

Insights may be AI-generated. But actions are reality-checked.
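
To make the validation loop concrete, here is a minimal Python sketch. It is not Tuskira's implementation or anything taken from the report; the `Finding` fields, the check names, and the `decide` function are all hypothetical, and the simulation and telemetry results are assumed to arrive as precomputed values. It only shows the shape of the logic: every check must pass before the agent acts, and any failure sends it back to replanning.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Finding:
    """Hypothetical summary of what simulation and telemetry reported."""
    cve_id: str
    reachable: bool      # did the simulated attack path reach the asset?
    mitigated: bool      # does a compensating control already cover it?
    risk_before: float   # estimated risk with no action taken
    risk_after: float    # estimated risk if the proposed action is applied


Check = Callable[[Finding], bool]

# Illustrative checks, evaluated in insertion order.
CHECKS: Dict[str, Check] = {
    "attack_path_reachable": lambda f: f.reachable,
    "not_already_mitigated": lambda f: not f.mitigated,
    "action_reduces_risk": lambda f: f.risk_after < f.risk_before,
}


def failed_checks(finding: Finding) -> List[str]:
    """Return the names of every check the finding does not satisfy."""
    return [name for name, check in CHECKS.items() if not check(finding)]


def decide(finding: Finding) -> str:
    """Act only when all checks pass; otherwise hand back to planning."""
    return "replan" if failed_checks(finding) else "escalate"


if __name__ == "__main__":
    print(decide(Finding("CVE-EXAMPLE-1", True, False, 0.8, 0.2)))
    print(failed_checks(Finding("CVE-EXAMPLE-2", False, True, 0.8, 0.8)))
```

Returning the list of failed checks, rather than a bare boolean, is a deliberate choice in this sketch: it gives the replanning step something specific to work with.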

2. Action Modules Already Operate with Guardrails

The report emphasizes the importance of secure execution layers. In operational platforms, agents don’t just fire off playbooks based on prompts. Actions are gated by context-aware checks: whether the asset is production-facing, whether it belongs to a critical business function, and what role the exposed identity has.

These decision gates are implemented today.
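
As a rough illustration of what such a gate might look like, the Python sketch below encodes the three context questions as fields and maps them to a verdict. The policy itself (deny on production-facing critical assets, require approval when any single risk factor is present) is an assumption made up for this example, as are the names `ActionContext`, `Verdict`, `PRIVILEGED_ROLES`, and `gate`. Real platforms will draw these lines differently.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"
    DENY = "deny"


@dataclass(frozen=True)
class ActionContext:
    production_facing: bool
    business_critical: bool
    identity_role: str  # role of the exposed identity, e.g. "admin"


# Assumed set of roles treated as high privilege in this sketch.
PRIVILEGED_ROLES = {"admin", "owner"}


def gate(ctx: ActionContext) -> Verdict:
    """Map asset and identity context to a verdict (illustrative policy)."""
    # Production-facing and business-critical: no autonomous action.
    if ctx.production_facing and ctx.business_critical:
        return Verdict.DENY
    # Any single risk factor: a human has to approve first.
    if (
        ctx.production_facing
        or ctx.business_critical
        or ctx.identity_role in PRIVILEGED_ROLES
    ):
        return Verdict.NEEDS_APPROVAL
    return Verdict.ALLOW


if __name__ == "__main__":
    print(gate(ActionContext(False, False, "read_only")))
    print(gate(ActionContext(True, True, "admin")))
```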

3. Digital Twins Aren’t Theoretical

A huge challenge in reasoning about security is that environments are too fragmented to simulate risk effectively. R Street discusses this in the context of the perception layer.

But leading platforms are already building live digital twins, which are real-time maps of cloud assets, network topology, identities, and controls. This lets agents test hypotheses and simulate threats in a safe, mirrored environment before recommending or executing any response.

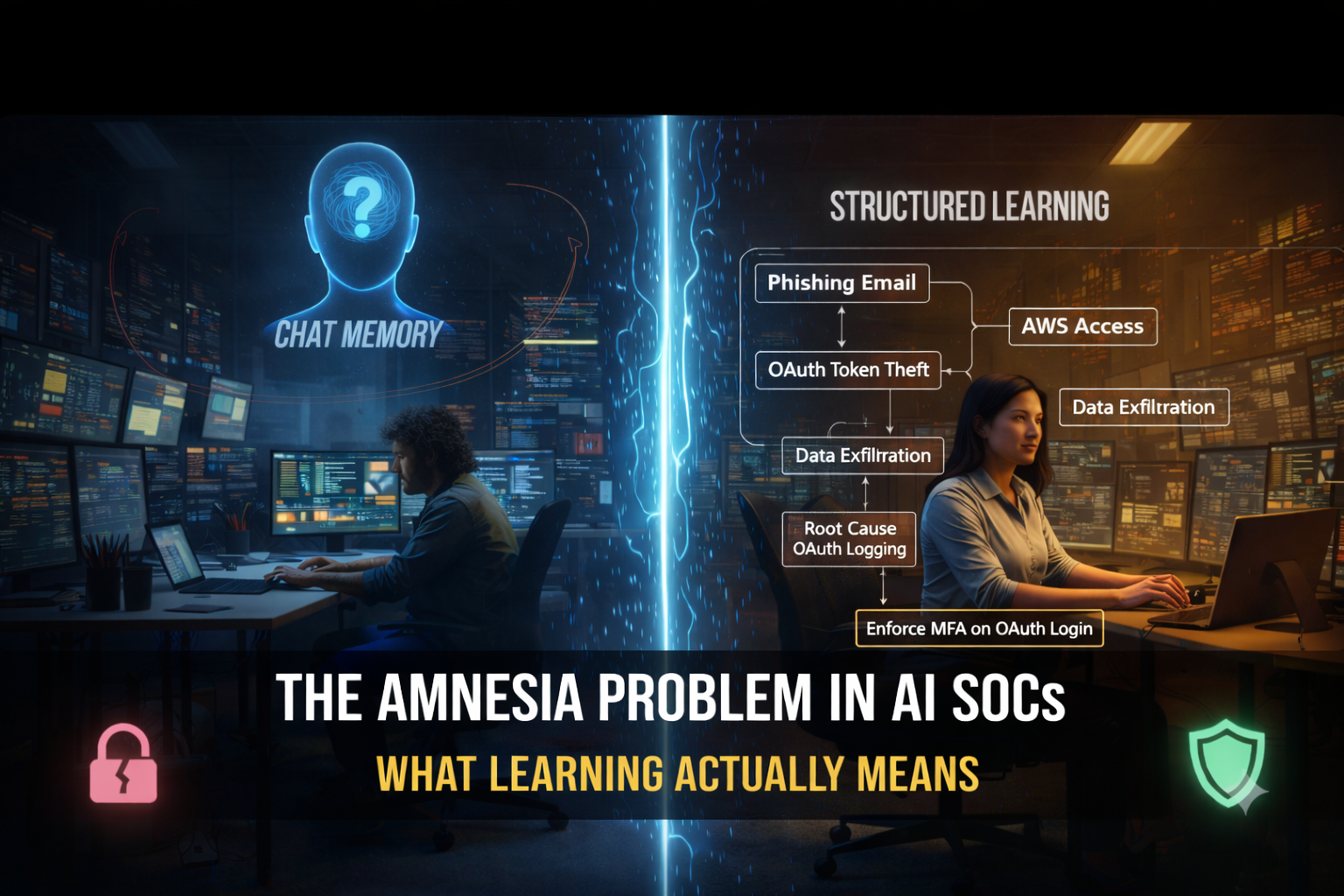
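
One way to picture "testing a hypothesis" against a twin: treat the mirrored environment as a graph and ask whether an attacker can get from an entry point to a sensitive asset, with and without a proposed control in place. The sketch below does that with a plain breadth-first search. The `TWIN` topology, the node names, and the `attack_path` function are invented for illustration; a real twin would carry far richer data (identities, controls, cloud metadata) than a simple adjacency map.

```python
from collections import deque
from typing import Dict, FrozenSet, List, Optional

# Hypothetical twin: each node maps to the nodes it can reach directly.
TWIN: Dict[str, List[str]] = {
    "internet": ["load_balancer"],
    "load_balancer": ["web_server"],
    "web_server": ["app_server"],
    "app_server": ["database"],
    "build_host": ["database"],
    "database": [],
}


def attack_path(
    graph: Dict[str, List[str]],
    source: str,
    target: str,
    blocked: FrozenSet[str] = frozenset(),
) -> Optional[List[str]]:
    """Return a shortest path from source to target, or None if unreachable.

    Nodes in `blocked` model a control that isolates them.
    """
    if source in blocked or target in blocked:
        return None
    queue = deque([[source]])
    seen = {source}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for nxt in graph.get(node, []):
            if nxt not in seen and nxt not in blocked:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


if __name__ == "__main__":
    # Is the database exposed today?
    print(attack_path(TWIN, "internet", "database"))
    # Would isolating the app server close that path?
    print(attack_path(TWIN, "internet", "database", frozenset({"app_server"})))
```

Running the same query before and after a simulated change is the point: the agent can see whether a proposed response actually removes the path without touching the live environment.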

4. Memory Isn’t Just for LLMs

The report portrays the memory layer as a future aspiration. But in practice, memory is already being used in production to:

- Track prior mitigation decisions and their outcomes

- Recall why a rule was tuned (and by which agent)

- Maintain reasoning trails that analysts can audit

It’s not just about long-term context; it’s about operational memory that earns trust over time.
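
The three uses listed above can be sketched as a small append-only decision log. This is a simplified, in-memory illustration rather than a description of any product's storage layer, and every name in it (`DecisionRecord`, `DecisionLog`, `why`, the field names) is hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class DecisionRecord:
    rule_id: str
    agent_id: str                  # which agent made the change
    action: str                    # e.g. "tune", "suppress"
    rationale: str                 # reasoning an analyst can audit later
    outcome: Optional[str] = None  # filled in once the result is known
    recorded_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


class DecisionLog:
    """Append-only record of agent decisions, kept in memory for this sketch."""

    def __init__(self) -> None:
        self._records: List[DecisionRecord] = []

    def record(self, rec: DecisionRecord) -> None:
        self._records.append(rec)

    def history(self, rule_id: str) -> List[DecisionRecord]:
        """All decisions made about a rule, oldest first."""
        return [r for r in self._records if r.rule_id == rule_id]

    def why(self, rule_id: str) -> Optional[DecisionRecord]:
        """Most recent decision for a rule, or None if there is none."""
        past = self.history(rule_id)
        return past[-1] if past else None


if __name__ == "__main__":
    log = DecisionLog()
    log.record(DecisionRecord("rule-42", "agent-a", "tune", "noisy on staging hosts"))
    last = log.why("rule-42")
    print(last.agent_id, last.rationale)
```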

So Where Do We Go From Here?

If R Street’s paper has a message, it’s this: agentic AI is no longer hypothetical, and the stakes are rising. However, it also shows how easy it is to discuss the potential of agentic systems without acknowledging that many of these capabilities are already in production.

What we need now is a shift in conversation:

- From "what if agents could triage threats?" to "what are your agents doing today?"

- From "can we trust them to act?" to "how are they proving their actions are valid?"

- From "here’s a new dashboard" to "here’s a teammate who closes the loop"

R Street sets the stage well. Now it’s on all of us, builders, practitioners, and policymakers, to define how this next phase of cybersecurity should work.

And it’s already started.

Want to dig deeper into the R Street framework? You can read the full report here.